In a previous article, we shared how Sonar scores on the Top 3 Java SAST Benchmarks:

When analyzing the benchmarks with SAST products available in the market, you may come across numerous results, making it challenging to determine what issues SAST products are expected to identify.

For this reason, we have built a ground truth dataset that contains a comprehensive list of issues that should be detected in the code. However, upon careful examination, you may notice that certain test cases are not included in this dataset.

Today, we would like to draw attention to two specific categories of test cases that we have excluded and discuss the reasons why SAST products are unable to detect them.

Fake vulnerabilities

WebGoat is a dynamic learning platform that offers a collection of lessons, each designed to illustrate a specific category of vulnerabilities and educate users on how common web security flaws can be exploited. While it is not a benchmark, we can think of each challenge within WebGoat as a test case where vulnerabilities should be detected in the code. In theory, all vulnerabilities should be detectable using SAST techniques. However, in practice, SAST tools are only able to identify a subset of vulnerabilities. Let's have a look at a lesson where nothing is detected and try to understand the root cause.

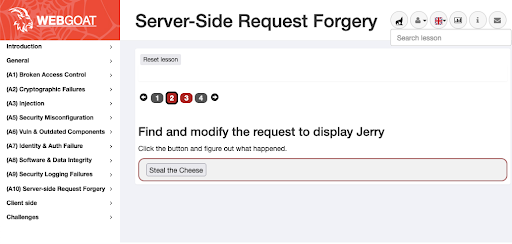

WebGoat user interface looks like this:

On the left panel, a list of lessons is presented, and one of the root items catches our attention with its familiar name: Server-Side Request Forgery (SSRF). In this particular lesson, at step 2, users are supposed to exploit an SSRF vulnerability by manipulating the server's request to retrieve an image. The solution is to modify the url parameter so that it fetches jerry.png instead of tom.png.

url=images%2Fjerry.pngSonar has a rule specifically designed to detect this vulnerability (S5144), but it reports 0 issues in this case. What is the reason behind this false negative? To shed light on this matter, let's take a closer look at the code for this lesson:

@RestController

public class SSRFTask1 extends AssignmentEndpoint {

@PostMapping("/SSRF/task1")

@ResponseBody

public AttackResult completed(@RequestParam String url) {

return stealTheCheese(url);

}

protected AttackResult stealTheCheese(String url) {

try {

StringBuilder html = new StringBuilder();

if (url.matches("images/tom.png")) {

html.append(

"<img class=\"image\" alt=\"Tom\" src=\"images/tom.png\" width=\"25%\""

+ " height=\"25%\">");

return failed(this).feedback("ssrf.tom").output(html.toString()).build();

} else if (url.matches("images/jerry.png")) { // `url` is compared to the an expected value

html.append(

"<img class=\"image\" alt=\"Jerry\" src=\"images/jerry.png\" width=\"25%\""

+ " height=\"25%\">");

return success(this).feedback("ssrf.success").output(html.toString()).build();

} else {

html.append("<img class=\"image\" alt=\"Silly Cat\" src=\"images/cat.jpg\">");

return failed(this).feedback("ssrf.failure").output(html.toString()).build();

}

} catch (Exception e) {

e.printStackTrace();

return failed(this).output(e.getMessage()).build();

}

}

}Typically, Server-Side Request Forgeries involve manipulating the URL to which the server sends requests. However, in this particular case, there is no sign of the commonly used API for sending HTTP requests, such as java.net.HttpURLConnection. Instead, the code simply verifies that the user input url matches images/jerry.png. When trying to send something other than images/jerry.png and images/tom.png the application outputs “You need to stick to the game plan!”.

Sonar will not detect an SSRF vulnerability in this case because the vulnerability is purely faked. Consequently, we did not include this as an expected issue in the ground truth.

OWASP WebGoat has a few other lessons that do not contain the vulnerability that is supposed to be illustrated. Out of the total 28 lessons, 11 of them are either fake or based on business logic flaws (see next section).

Purely business logic vulnerabilities

The OWASP Java Benchmark was specifically designed to assess any AST tool, including DAST (Dynamic Application Security Testing). The majority of test cases within the benchmark can and should be detected by SAST engines. However, we have intentionally excluded test cases like this one:

public void doPost(HttpServletRequest request, HttpServletResponse response)

throws ServletException, IOException {

response.setContentType("text/html;charset=UTF-8");

Map<String, String[]> map = request.getParameterMap();

String param = "";

if (!map.isEmpty()) {

String[] values = map.get("BenchmarkTest00031");

if (values != null) param = values[0];

}

request.getSession().putValue("userid", param);

}In this particular test case, the user has control over the value of the variable param, which is then used to set a session variable called userid on the server side. The benchmark maintainer has classified this test case as “CWE-501: Trust Boundary Violation”. To provide a more precise definition, this problem occurs when programs blur the line between what is trusted and what is untrusted. As a result, developers inevitably lose track of which data has been validated and which has not.

Although the code for this test case is artificial and the userid session variable is not used after that, we can imagine a scenario where the application relies on userid for user authentication. In such a case, changing the value of userid could potentially allow attackers to impersonate another user. As this is a serious security concern, it may be desirable to detect such vulnerabilities.

The technology used to detect if user inputs are used in sensitive APIs is known as "taint analysis.". In this specific case, using taint analysis would make it relatively easy to identify patterns where a user-controlled value enters the second argument of methods like javax.servlet.http.HttpSession#putValue. By doing so, it would make it possible to detect this test case. However, this approach could also raise issues with very common development practices.

Let's now consider a slightly different test case:

public void doPost(HttpServletRequest request, HttpServletResponse response)

throws ServletException, IOException {

response.setContentType("text/html;charset=UTF-8");

Map<String, String[]> map = request.getParameterMap();

String param = "";

if (!map.isEmpty()) {

String[] values = map.get("BenchmarkTest00031");

if (values != null) param = values[0];

}

request.getSession().putValue("lang", param); //The session variable name is now different

}The session variable name has changed and it’s now called lang. If a SAST tool implements the logic we described earlier, it will also detect a vulnerability in this example. However, If the lang variable is used to store the language preference of the user, it is likely not a vulnerability, and detecting it would result in a false-positive.

To detect the safe from the unsafe case, an option would be to apply keyword-based heuristics: only raise an issue if the key contains the word “secret”, “id”, “session” etc. Keyword-based heuristics are very fragile though as they usually only work for one language.

Understanding the business logic of the application is crucial in determining whether setting userid with arbitrary values is safe or not. SAST tools have a limited understanding of the application's business logic because it varies from one application to another, and inferring it from the code is often difficult or impossible.

This is why purely business logic vulnerabilities are not typically detected by static analysis. Attempting to detect them would inevitably lead to false positives on safe code and provide no value to developers.

As a result, we have excluded approximately 7% of purely business logic test cases, including those classified as 'Trust Boundary', from the OWASP Java Benchmark.

Summary

Analyzing benchmarks and vulnerable applications is a convenient way to assess SAST product's capabilities. These projects contain many relevant test cases that help us identify and overcome the limits of our analyzers. Go to SonarQube Cloud and analyze one of the 3 benchmarks yourself to make your own opinion.

Benchmarks should not be considered a universal truth as they are rarely designed for the sole purpose of measuring the performance of SAST. In some cases, vulnerabilities may be intentionally faked, making them impossible to detect.

SAST tools excel at finding issues that manifest at the code level. They provide limited value when it comes to detecting issues where part of the information is in the code, while the rest of the context is not. Vulnerability classes such as authentication or access-control flaws often require the human eye and a deep understanding of the application’s logic to be detected and remediated.