Last August, we were fortunate to present our work on the security of Visual Studio Code at DEF CON 31, one of the biggest hacker conventions in the world. We received great feedback on our presentation, and the organizers recently released a recording for those who couldn't attend the event:

We wanted to share the content of our talk in a blog post to make it more discoverable and accessible to people who prefer learning with text over videos. Stay tuned for two more publications on November 14th and November 21st, this time bringing new content and new vulnerabilities!

Introduction

The journey of most developers starts with their code editor, and they usually have strong opinions on this topic. According to Stack Overflow's latest developer survey, about 74% of respondents work or "want to work with" Visual Studio Code (often shortened as VSCode), compared to about 28% for the IntelliJ suite, and more "surprising" numbers like 8.5% for Nano or 22.6% for Vim. Overall, it shows that VSCode is far ahead in this market.

Let's say someone just sent you an archive via email. You extract it and open the folder in your favorite code editor, Visual Studio Code, to inspect it. That should be safe because there are only text files, right?

This calculator isn't expected: it shows that opening this folder somehow led to executing an arbitrary command. Here it was a calculator, but it could have been anything else, potentially malicious.

And while surprising, that's where we were left wondering: what's supposed to be unsafe? There's a general trade-off regarding the security of developer tools. We all want—and love—deep integration with the many language ecosystems, but there's no standard threat model that dictates what we can expect from these tools.

For instance, could installing an IDE weaken the security of my system because it registers privileged services? Can I open somebody else's code without any risk? In our case, that's a fair question: it's our job to read code from external sources.

Another similar question could be: Does my editor run the code I see? It may sound silly at first glance, but it happened with JEB, a Java decompiler platform often used to analyze malware running the current file in a sandbox. And how about more advanced features like VSCode's Remote Development plugin; do server and client have to trust each other? (spoiler: yes)

In general, nobody likes to be surprised when it comes to security, but unfortunately, only a few pieces of software are intentional and explicit about their threat models. Meanwhile, we can also notice that malicious actors increasingly target developers and other technical roles. That makes sense: they have access to source code, secrets, internal services, etc.

Among recent examples, threat actors allegedly used Plex vulnerabilities to compromise a LastPass DevOps engineer, ultimately leading to a widely covered breach. Google's Threat Analysis Group also attributed a campaign to North Korea during which influential security researchers would receive archives of Visual Studio projects in a message asking for help understanding a vulnerability. If they built it, they would be compromised.

The goal of this publication is to show you around the attack surfaces of a modern code editor like VSCode and to think about the associated risks and threat models. For this, we first need to understand how VSCode is architected, and then we can dive deeper into several common sources of risk we saw during our research and in other publications. We will cover vulnerabilities in VSCode and popular plugins, found either by the Sonar R&D team or by other researchers, who will be credited accordingly.

It's also important to note that most vulnerabilities require some degree of interaction, which does not diminish their real-world impact because these are part of the usual developer workflow: open a new project or folder, click links, etc. We may call them Remote Code Execution, while a more adequate term could be Arbitrary Code Execution since the attack is carried out from local resources most of the time.

Finally, we can look into the reporting process with Microsoft, where we have an interesting anecdote to share.

Visual Studio Code's Architecture

VSCode is based on Electron, combining the powers of Node.js and the Chromium browser into an application that can use web technologies for its UI while still being able to interact with the operating system. Being powered by web technologies also means that with only a few changes, VSCode can run in your web browser! GitHub does this for their Codespaces on https://github.dev.

VSCode is also highly extensible due to its extension ecosystem. Everybody can write and publish extensions on the official marketplace, and it is straightforward to implement support for new programming languages and frameworks using the Language Server Protocol.

Most of VSCode is open source, almost 800 thousand lines of code! However, when downloading the official builds from Microsoft, there is also a proprietary portion.

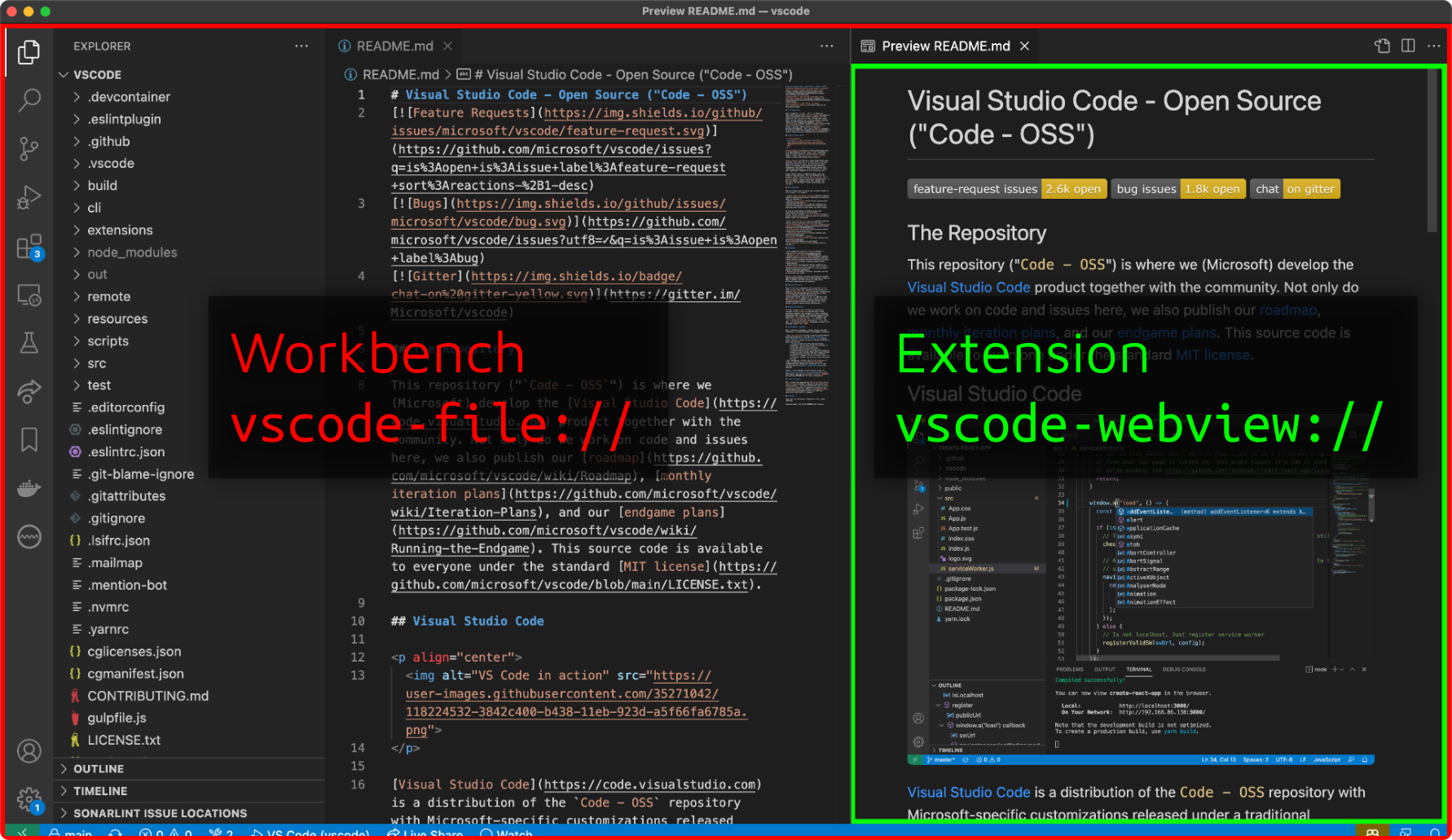

To ensure proper security of the whole IDE, VSCode splits its functionality into different processes. It inherits this process model from Electron, which separates the UI into renderer processes while the OS-level code lives in the main process. The renderer processes are less privileged as they run in the bundled Chromium browser, and the main process is a privileged Node.js application that can directly interface with the OS.

VSCode has not only one but multiple privileged processes. These include the main process that starts and orchestrates all the other processes, the shared process that hosts things like PTYs and file watchers, and the extension host process that runs the privileged part of built-in and third-party extensions.

As mentioned before, the UI is less privileged because, as usual for websites, the UI can't directly write files or spawn child processes. It is confined to a safe set of APIs that the web browsers expose. These less privileged parts must use message-passing interfaces to communicate and integrate with the rest of the application.

Since some of the actions that an IDE's UI will have to trigger will always be security relevant, such as saving a file to a user-specified path or running a build command, the UI is split even further into different parts:

The main UI, called workbench, provides the standard set of VSCode features and can order the privileged processes to perform actions such as saving a file. The workbench does not render user content to make Cross-Site Scripting attacks less likely. If user content has to be rendered, it is usually done in unprivileged webviews that cannot talk to the privileged processes. Since this happens inside a web browser, the Same-Origin Policy also applies and enforces strict isolation.

To communicate between different UI parts, developers can use the regular postMessage() API known from the web. If the UI wants to talk to other processes, they can either do this with MessagePorts or use a preload script and Electron's contextBridge.
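This message-passing boundary is only as strong as the checks performed by the receiving side. The following is a minimal sketch, not VSCode's actual code, of a handler that validates the sender's origin and the shape of the message before acting on it. The origin value, the message types, and the function names are all hypothetical.

```typescript
// Hypothetical message shapes; VSCode's real internal protocol differs.
type WebviewMessage =
  | { type: "ready" }
  | { type: "openLink"; href: string };

// Minimal stand-in for the fields of a DOM MessageEvent that we inspect.
interface IncomingMessage {
  origin: string;
  data: unknown;
}

// Assumed value, for illustration only.
const TRUSTED_ORIGIN = "vscode-webview://example-view-id";

// Turn untyped data into a known message, or reject it.
function parseMessage(data: unknown): WebviewMessage | undefined {
  if (typeof data !== "object" || data === null) {
    return undefined;
  }
  const record = data as Record<string, unknown>;
  if (record["type"] === "ready") {
    return { type: "ready" };
  }
  const href = record["href"];
  if (record["type"] === "openLink" && typeof href === "string") {
    return { type: "openLink", href };
  }
  return undefined; // Unknown message types are rejected.
}

function handleWebviewMessage(event: IncomingMessage): string {
  // Check who is talking before looking at what they say.
  if (event.origin !== TRUSTED_ORIGIN) {
    return "rejected: untrusted origin";
  }
  const message = parseMessage(event.data);
  if (message === undefined) {
    return "rejected: malformed message";
  }
  if (message.type === "ready") {
    return "accepted: ready";
  }
  return `accepted: openLink ${message.href}`;
}
```

In a browser context, such a function would typically be registered with `window.addEventListener("message", ...)`. Returning a string here only keeps the sketch easy to test.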

Exposed Network Services

Let's start with a significant source of bugs that keeps coming up: exposed network services. Indeed, extensions often need them to communicate with other binaries or components on the system or even with VSCode itself.

In a perfect world, everybody would be using the right IPC mechanism that wouldn't rely on the network (pipes, UNIX sockets, etc.), but there are still rare cases where you need to expose something on the network. It is also easier to rely on the network when building cross-platform applications.

The problem is that websites and malicious users on the LAN or the same host (e.g., a multi-user server) may be able to reach these ports. Even if the service is only exposed on localhost, which would be considered the safest option, external websites can trick browsers into sending requests to this service!
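To make this risk more concrete, here is a minimal sketch of the kind of checks a localhost-only service could apply before handling a request. It is an illustration under stated assumptions rather than code from any real extension: the `LocalRequest` shape, the function name, and the idea of a shared secret token are all hypothetical.

```typescript
// Hypothetical view of the request fields relevant to the check.
interface LocalRequest {
  hostHeader?: string;   // value of the Host header
  originHeader?: string; // value of the Origin header, if present
  token?: string;        // secret presented by the client
}

function isLocalRequestAllowed(
  req: LocalRequest,
  port: number,
  expectedToken: string
): boolean {
  // A DNS rebinding attack reaches the service under an attacker-chosen
  // name, so only the Host values we expect are accepted.
  const allowedHosts = [`127.0.0.1:${port}`, `localhost:${port}`];
  const host = (req.hostHeader ?? "").toLowerCase();
  if (allowedHosts.indexOf(host) === -1) {
    return false;
  }
  // This sketch assumes legitimate clients are local tools, not web pages,
  // so any request carrying an Origin header is refused.
  if (req.originHeader !== undefined) {
    return false;
  }
  // Browsers do not attach Origin to every request, so the secret token
  // remains the main line of defense.
  return req.token !== undefined && req.token === expectedToken;
}
```

A real service would also compare the token in constant time and generate it randomly at startup; both are left out to keep the example short.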

A good first example would be Rainbow Fart, an extension that plays sounds as you type in VSCode. It may sound like a silly feature, but this has over 135,000 installs in the Microsoft store. Kirill Efimov of Snyk found that this extension exposes an HTTP server on port 7777, without protection against CSRF attacks. Kirill also identified a ZIP-based path traversal on the endpoint /import-voice-package that allowed him to write files to arbitrary locations. That means that if you visited a malicious website from your browser, it could force Rainbow Fart to deploy a new voice package and overwrite files such as .bashrc in your home directory, helping the attacker to execute arbitrary commands on your system.

Another example of this vulnerability existed in the core. The Electron layer of VSCode was exposing a Node.js debugger on localhost, listening on a random port. This was reported independently by two researchers (@phraaaaaaa and Tavis Ormandy, tracked as CVE-2019-1414). This is quite critical because if you can reach this port and "talk" to the debugger, its job is to let you run arbitrary JavaScript code. This happens in a privileged context of the application, where one can simply import child_process and execute arbitrary commands. This is not as straightforward to exploit as Rainbow Fart, and likely impossible to exploit with recent browser mitigations against DNS Rebinding and access to local services.
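The path traversal part of this bug class can be illustrated with a minimal sketch of an archive entry check. This is not the extension's code or its actual fix; the function name is hypothetical, and the check is deliberately conservative (it rejects any entry that contains a `..` segment, even if the final path would stay inside the target folder).

```typescript
// Returns true only for entry names that cannot escape the extraction folder.
function isSafeArchiveEntry(entryName: string): boolean {
  if (entryName.length === 0) {
    return false;
  }
  // Treat Windows separators like forward slashes before inspecting segments.
  const normalized = entryName.replace(/\\/g, "/");
  // Reject absolute paths ("/etc/passwd") and drive letters ("C:/...").
  if (normalized.charAt(0) === "/" || /^[A-Za-z]:/.test(normalized)) {
    return false;
  }
  // Reject any parent-directory segment, wherever it appears.
  return normalized.split("/").every((segment) => segment !== "..");
}
```

An extractor would call this for every entry and abort on the first unsafe name. It does not cover symbolic links stored inside the archive, which need separate handling.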

Protocol Handlers

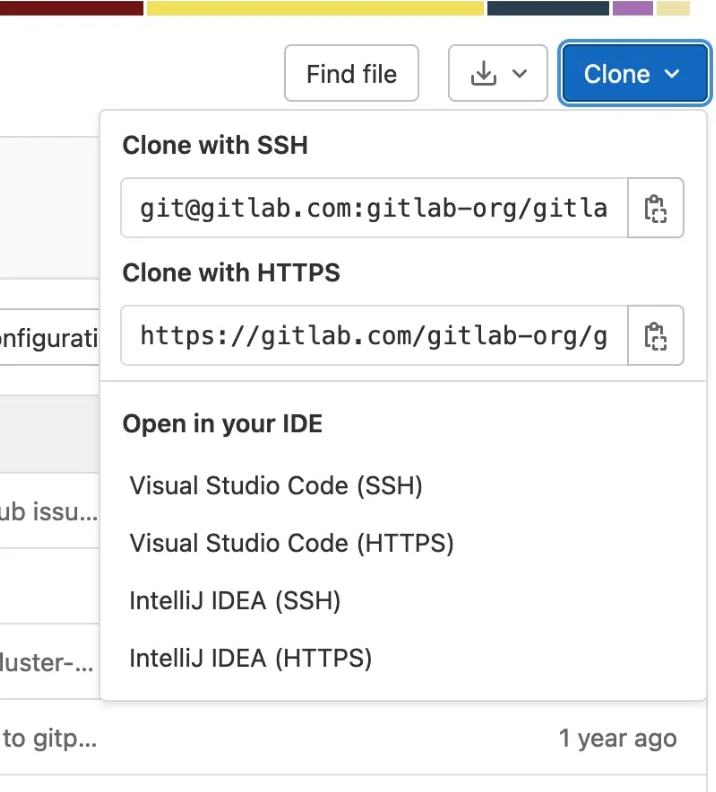

Now, we can spice things up and look into protocol handlers and deep links. This is a useful feature offered by Electron applications, as Electron provides the native layer that helps integrate with the operating system and its desktop environment. In a way, it's a form of IPC that doesn't rely on the network. In our case, both the core and extensions can register custom protocol handlers. Visual Studio Code has vscode://, and its nightly release has vscode-insiders://.

Deep links have interesting practical applications, some of which you may have already used. For instance, they power the "Open in your IDE" button on GitLab. Under the hood, this is simply a vscode:// link, and the operating system knows it should dispatch it to VSCode, and, here, to the Git extension.

We already covered the attack surface of the Git integration and the discovery of CVE-2022-30129 in Securing Developer Tools: Argument Injection in Visual Studio Code, so we won't describe it again here. For the pleasure of the eyes, this is what it looks like:

There was also a similar finding by Abdel Adim Oisfi (@smaury92) of Shielder in the Remote Development extension with CVE-2020-17148. This is a closed-source extension, so we won't share the source code here, but it was available under vscode://vscode-remote/. Behind the scenes, it calls SSH to establish the tunnel based on several parameters obtained through the deep link. Among them, the host is an important one and is inserted as a positional argument of the call to ssh: ssh -T -D [...] '<HOST>' bash. By crafting a link that tells VSCode Remote Development to connect to a host that starts with a dash, ssh is tricked into thinking this is an option. From here, options like -o allow overriding the SSH client configuration, and directives like ProxyCommand lead to executing an arbitrary command on the victim's system!

You can find all the details in Shielder's technical advisory, and we added this vector to our project Argument Injection Vectors.
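As an illustration of how this class of argument injection can be avoided, here is a minimal sketch that validates the host before building the argument list for ssh. It is not the code of the Remote Development extension, which is closed source. The function name and the allowlist pattern are assumptions, and the `--` end-of-options marker is the usual getopt convention, assumed here to be honored by the ssh client in use.

```typescript
// Hypothetical allowlist: an optional "user@" prefix followed by a hostname
// or IPv4 address. IPv6 literals are intentionally not supported here.
const SSH_HOST_PATTERN =
  /^(?:[A-Za-z0-9_][A-Za-z0-9_.-]*@)?[A-Za-z0-9][A-Za-z0-9.-]*$/;

function buildSshArgs(host: string): string[] {
  // A host such as "-oProxyCommand=..." fails the pattern because the first
  // character must be alphanumeric (or an underscore for the user part).
  if (!SSH_HOST_PATTERN.test(host)) {
    throw new Error(`refusing suspicious SSH host: ${JSON.stringify(host)}`);
  }
  // "--" tells the option parser that everything after it is positional.
  return ["-T", "--", host, "bash"];
}
```

The resulting array is meant to be passed to an API that takes an argument vector rather than a single command string, so that no shell gets to reinterpret the host.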

Workspace Settings and Local Data

We can now cover something more specific to VSCode: workspace settings. The IDE supports per-workspace settings that you can commit along with your source code to share them with other developers. For instance, it can be helpful to share linter settings, shortcuts, or even automated tasks across a company. These settings are loaded when the folder is opened, so you don't need to click on a special VSCode project to make it happen. Are there sensitive settings in these files?

And the answer is yes, absolutely! The first vulnerability we know of on this topic was found by the prolific Justin Steven in 2017: he identified that the official Git integration has a setting to change the path to the git binary. This could point to the current folder, where a malicious binary could have been planted. There were a few pitfalls to overcome to exploit it successfully, but in the end, Justin did it!

Another example comes with CVE-2020-16881, found by David Dworken in the default NPM integration. This time, the extension extracts information from the local file package.json, if it exists. This manifest contains information about software dependencies, and the command npm can be invoked to know more about these: release date, description, version, etc.

There was an issue with how the dependency name was interpolated into the command line. At the time, it was directly concatenated into the command line, leaving the door open for attackers to inject additional commands:

    private npmView(pack: string): Promise<any> {
      return new Promise((resolve) => {
        // Vulnerable: pack comes from package.json and ends up in a shell command
        const command = 'npm view ' + pack + ' description dist-tags.latest homepage';
        cp.exec(command, (error, stdout) => {
          if (error) {
            return resolve();
          }
          // [...]
        });
      });
    }

This was later bypassed by Justin Steven (again!), with CVE-2020-17023. To address the previous issue, Microsoft developers introduced a function named isValidNPMName() with the intent of detecting potentially invalid package names but did not remove the unsafe interpolation. The logic of this function is convoluted, and it does not even address the root cause of the vulnerability.
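Addressing the root cause means never letting a shell interpret the package name. The sketch below shows one way to do this: validate the name against a strict allowlist, then build an argument vector instead of a command string. It is not Microsoft's actual fix. The function name is hypothetical, and the pattern only approximates npm's naming rules (it is stricter in that it also refuses a leading dash).

```typescript
// Approximation of npm's naming rules: optional @scope/, lowercase URL-safe
// characters only. A leading "-" is refused so that the name can never be
// mistaken for a command-line option.
const NPM_NAME_PATTERN =
  /^(?:@[a-z0-9~][a-z0-9._~-]*\/)?[a-z0-9~][a-z0-9._~-]*$/;

function buildNpmViewArgs(pack: string): string[] {
  // Reject anything that is not clearly a package name.
  if (pack.length > 214 || !NPM_NAME_PATTERN.test(pack)) {
    throw new Error(`invalid package name: ${JSON.stringify(pack)}`);
  }
  // Each element is a separate argument; nothing is concatenated into a
  // string that a shell would parse.
  return ["view", pack, "description", "dist-tags.latest", "homepage"];
}
```

The array would then be handed to an API such as Node.js's `child_process.execFile`, which does not spawn a shell by default. On Windows, where npm is a `.cmd` shim, launching it without a shell needs extra care that this sketch does not cover.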

Another interesting fact about this validation function is that it is a fail-open security mechanism: if it's not clear whether a package name is dangerous or not, it is considered safe.

    private isValidNPMName(name: string): boolean {
      // [...]
      const match = name.match(/^(?:@([^/]+?)[/])?([^/]+?)$/);
      if (match) {
        const scope = match[1];
        if (scope && encodeURIComponent(scope) !== scope) {
          return false;
        }
        // [...]
      }
      // Fail-open: names that do not match the pattern are accepted
      return true;
    }

The main takeaway from these two vulnerabilities is that the IDE shouldn't trust information coming from either workspace settings or project files. Does that mean that we're doomed and that we can't get nice things in VSCode?

Workspace Trust

To tackle the risk caused by workspace settings and extensions trusting malicious data, Microsoft introduced a new feature called Workspace Trust in May 2021. The goal is to reduce the impact of malicious folders and establish new security assumptions: untrusted folders are considered safe to open in restricted mode, while trusting a folder is inherently unsafe, since a maliciously crafted project could then execute arbitrary commands on the host running VSCode.

This works by letting extensions declare at which trust level they can run. Ultimately, extensions that didn't properly go through a security audit before saying they could run with untrusted data can make it easy to get around Workspace Trust—including built-in ones!
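Conceptually, restricted mode means that sensitive values coming from the folder are ignored until the user grants trust. The following minimal sketch illustrates that idea for settings. It is not how VSCode implements Workspace Trust: the function name and the list of restricted settings are hypothetical, with `git.path` used only as an example of a sensitive setting.

```typescript
type Settings = Record<string, string>;

// Hypothetical list of settings whose workspace value is too sensitive to
// honor in an untrusted folder.
const RESTRICTED_SETTINGS = ["git.path"];

function effectiveSettings(
  user: Settings,
  workspace: Settings,
  trusted: boolean
): Settings {
  // Start from the user's own settings, which are always trusted.
  const result: Settings = { ...user };
  for (const key of Object.keys(workspace)) {
    const restricted = RESTRICTED_SETTINGS.indexOf(key) !== -1;
    if (restricted && !trusted) {
      continue; // Keep the user-level value (or none) in restricted mode.
    }
    result[key] = workspace[key];
  }
  return result;
}
```

With this model, a planted `git.path` pointing into the project folder has no effect until the folder is trusted, while harmless settings such as indentation still apply.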

We covered this topic in more depth with a vulnerability in the official Git integration in our previous publication, Securing Developer Tools: Git Integrations; expect new findings to be released on November 21st.

Cross-Site Scripting (XSS)

As mentioned earlier, VSCode is partially powered by a web browser, meaning many of the bug classes usually found in the client-side code of web applications also apply here. The main one is, of course, XSS.

Since we already noted that some parts of the UI have to be able to trigger privileged actions in the other parts of VSCode, it becomes clear that XSS vulnerabilities can have even more impact than on regular websites. Additionally, the attack surface increases with each third-party extension users install because each can extend the UI. A typical example is an extension that renders a specific file format.

Let's look at two examples that showcase different ways XSS in a UI component can lead to arbitrary code execution on the system. The first example is CVE-2021-43908, which TheGrandPew and s1r1us discovered. To get all the nitty-gritty details, we highly recommend checking out their blog post and their DEF CON talk.

In short, they discovered that the built-in Markdown preview extension would let them use a <meta> HTML tag to redirect the webview to any website, such as their attacker page. While this doesn't give them access to any privileged APIs yet, it allowed them to run JavaScript that can talk to the postMessage handlers of the surrounding webviews.

That message handler responded to certain messages with the absolute path of the currently opened project. While this information is not too sensitive, it did form an essential part of the next exploit step. The workbench UI is loaded from a different protocol (vscode-file://), served by a custom handler. That handler contained a relative path traversal bug that would allow loading any file from disk via that protocol.

Since the exploit leaked the absolute path of the project folder in the previous step, the attackers could load a malicious HTML file under the privileged workbench protocol. Loading attacker-controlled HTML from that protocol now allowed them to use Node.js's require function to load the child_process module and execute arbitrary commands.

There were other vulnerabilities, such as CVE-2021-26437, discovered by Luca Carettoni, that could be exploited with a similar approach of escalating privileges by going up the webview tree and finally ending up in the privileged part of the UI. However, there are also other ways for XSS to cause code execution without jumping through multiple hoops.

One example of this is CVE-2022-41034, discovered by Thomas Shadwell. He also found a way to execute JavaScript in an unprivileged webview, but instead of going the postMessage() route, he just auto-clicked a command: link.

These links can execute VSCode-internal commands, which are not as powerful as OS commands, but they can lead to the execution of arbitrary OS commands when using the right one. In this case, the workbench.action.terminal.new command was used to spawn an integrated terminal with an attacker-controlled executable and arguments.
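One way to reduce the risk of such links is to only honor commands from an explicit allowlist. The sketch below illustrates the idea. It is a hypothetical helper, not VSCode's implementation, and the command identifier used in the allowlist of the usage example is only a sample value.

```typescript
// Returns true only if href is a command: link whose command identifier is
// explicitly allowed. Arguments after "?" are ignored for the decision.
function isCommandLinkAllowed(
  href: string,
  allowedCommands: string[]
): boolean {
  const prefix = "command:";
  if (href.slice(0, prefix.length) !== prefix) {
    return false;
  }
  const rest = href.slice(prefix.length);
  const queryIndex = rest.indexOf("?");
  const commandId = queryIndex === -1 ? rest : rest.slice(0, queryIndex);
  return allowedCommands.indexOf(commandId) !== -1;
}

// Usage: only a formatting command is allowed in this hypothetical view.
const allowed = ["editor.action.formatDocument"];
const terminalLink = "command:workbench.action.terminal.new?%7B%7D";
const formatLink = "command:editor.action.formatDocument";
const results = [
  isCommandLinkAllowed(terminalLink, allowed),
  isCommandLinkAllowed(formatLink, allowed),
];
```

Matching on the exact identifier, rather than on a prefix or substring, avoids accidentally allowing a more powerful command with a similar name.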

The existence of these command: links sparked our interest to look for their other uses in VSCode. We discovered more vulnerabilities while researching this feature, this time in third-party extensions. Stay tuned for next week's blog post to learn more!

Reporting to Microsoft

Now that we've seen a lot of ways that can lead to severe vulnerabilities in VSCode, we'll look at how to report them to Microsoft. The recommended way is to use the vulnerability disclosure platform of Microsoft's Security Response Center (MSRC).

We found it to have better legal terms than most bug bounty platforms, and we had a good experience using it. It is a centralized interface for all steps of the disclosure process, but you can always email MSRC if something doesn't fit into the usual workflow.

The first bug we found was the Git local-level configuration issue (CVE-2021-43891). We reported it without expecting a big bounty, but Microsoft awarded us $30,000! Since we were surprised by the amount, we asked them why we got that much but never got an answer.

One year later, we discovered another Git-related bug. This time, it was the argument injection in the protocol handler (CVE-2022-30129). After the issue was triaged, we waited for the bounty decision and got… nothing! After asking them about the difference in bug bounty payout, we got an answer this time:

"Case X was awarded due to our error. VS Code extensions, including those built in, were moved out of scope for bug bounty awards August 2020"

We were surprised to learn that even built-in extensions are not in scope for rewards, even though they are shipped with VSCode and enabled by default. While not leading to more bounties, the entirety of our research got us on the MSRC Leaderboards in Q2 2022, as well as Q2 and Q3 2023.

Conclusion

In this blog post, we learned a lot about VSCode and its security landscape. We started with the code editor's architecture and then looked at five major attack surfaces, namely exposed network services, protocol handlers, workspace settings and local data, workspace trust, and XSS. We also touched on how to report vulnerabilities to Microsoft and our experience.

If you are using VSCode yourself, we want to emphasize that Workspace Trust is here to help. If you see the trust prompt, don't just click on "I trust" to get rid of the annoying dialog. We hope our blog post makes you think twice before trusting that random project you just cloned from GitHub! We like the principle of least surprise, so Workspace Trust is a very welcome addition to protect users.

Finally, we note that many developer tools are not built with security in mind. Many of them have to be retrofitted to meet today's security standards, but the burden of responsibility is still not clear. Is the user responsible for protecting themselves, or should the tool be safe by default?

We will release two more blog posts that continue our series on Visual Studio Code's security in the coming weeks. Next Tuesday's article will cover vulnerabilities in third-party extensions used by millions. Stay tuned!