Table of contents

Introduction to the Model Context Protocol

Background and motivation

Overview of protocol architecture and key features

Integration workflows and common use cases

Security, privacy, and trust

Community adoption, ecosystem impact, and future direction

Conclusion

SonarQube MCP Server

Introduction to the Model Context Protocol

The Model Context Protocol (MCP) is an open standard, introduced and open-sourced by Anthropic, that standardizes how context is exchanged between AI applications, the models they run, and the external tools and data sources they connect to. Created in response to growing needs for interoperability, scalability, and transparency in multimodal and multi-agent AI environments, MCP offers a common approach to context management. By adhering to the protocol, developers and organizations can integrate complex model workflows more reliably, manage input and output requirements, and carry context across ecosystems.

MCP is grounded in the principles of open-source collaboration, extensibility, and robust security. The protocol supports seamless communication between AI agents, model APIs, and third-party integrations.

Background and motivation

Anthropic’s decision to open source the Model Context Protocol (MCP) was driven by a critical need to address the growing complexity in artificial intelligence deployments, particularly those utilizing large language models (LLMs), multimodal inputs, and distributed agent architectures.

As AI technologies evolve and organizations accelerate their adoption, the challenge of managing context becomes more pronounced, often resulting in fragmented systems where proprietary approaches create interoperability barriers and expose platforms to compatibility problems and security risks.

The motivation for MCP lies in enabling a common standard that streamlines context management, ensuring that user prompts, session histories, and other contextual data are consistently formatted and easily interpreted across a wide range of AI platforms and services. By standardizing how context is serialized and exchanged, MCP minimizes friction between different models and agents, significantly enhancing transparency and providing developers and enterprises with a reliable, scalable foundation for collaboration and workflow integration.

This approach not only improves the performance and effectiveness of AI models but also addresses data-protection compliance concerns, makes interoperability issues easier to debug, and helps organizations meet regulatory obligations such as GDPR and HIPAA while securing AI orchestration.

By adopting MCP, companies can future-proof their AI investments, foster secure and privacy-conscious data exchanges, and establish a higher level of trust in their deployments. The protocol’s open-source nature encourages community participation, accelerates innovation, and makes best practices in context handling universally accessible, all of which are essential in a rapidly growing AI ecosystem.

Overview of protocol architecture and key features

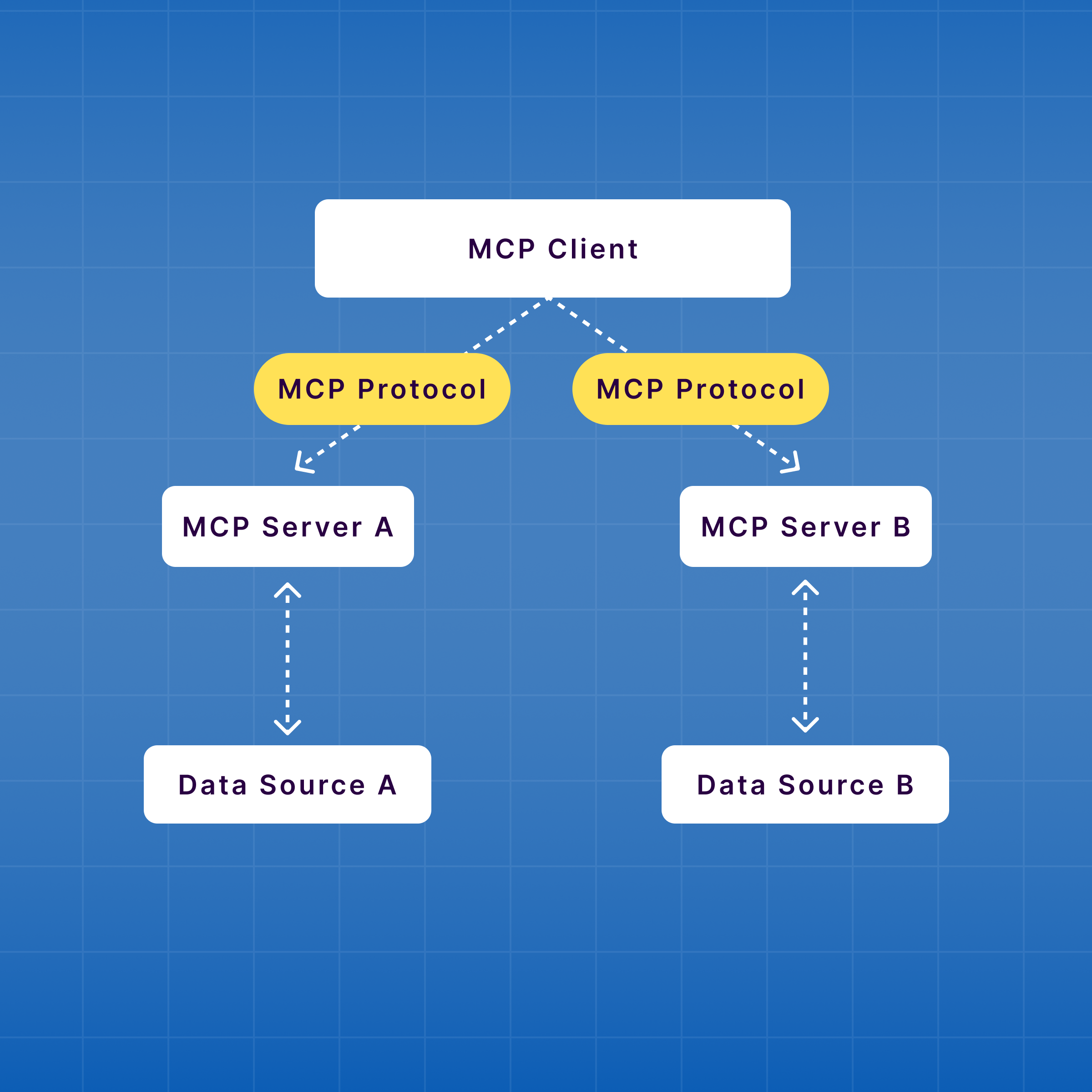

The Model Context Protocol is built on a modular client-server architecture designed for flexibility, scalability, and secure interoperability in AI systems. At its core, MCP specifies how context is serialized, shared, and parsed: messages are JSON-RPC 2.0 requests, responses, and notifications exchanged between clients embedded in AI applications and servers that expose context. Servers organize that context into resources (data such as files or records), prompts (reusable templates), and tools (functions a model can invoke), and it can include user prompts, session state, environment variables, and metadata. Context objects can be annotated and validated for format consistency, security policies, and integration readiness, which streamlines tasks such as prompt engineering and multimodal input handling.
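
Because MCP messages are JSON-RPC 2.0, the serialize-share-parse cycle can be sketched with nothing but Python’s standard `json` module. This is a minimal illustration under stated assumptions, not an MCP SDK: `make_request` and `parse_response` are hypothetical helpers, the resource URI is a placeholder, and the reply is a sample. `resources/read` is a method name from the MCP specification.

```python
import json
from typing import Any, Dict, Optional


def make_request(request_id: int, method: str, params: Optional[Dict[str, Any]] = None) -> str:
    """Serialize a JSON-RPC 2.0 request, the envelope MCP uses for every call."""
    message: Dict[str, Any] = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


def parse_response(raw: str, expected_id: int) -> Dict[str, Any]:
    """Parse a JSON-RPC 2.0 response and return its result, raising on an error object."""
    message = json.loads(raw)
    # JSON-RPC pairs each response with its request through the shared "id".
    if message.get("jsonrpc") != "2.0" or message.get("id") != expected_id:
        raise ValueError("message is not a response to the expected request")
    if "error" in message:
        error = message["error"]
        raise RuntimeError(f"JSON-RPC error {error['code']}: {error['message']}")
    return message["result"]


# Usage: request a resource (hypothetical URI) and parse a sample reply.
request = make_request(1, "resources/read", {"uri": "file:///project/README.md"})
reply = '{"jsonrpc": "2.0", "id": 1, "result": {"contents": []}}'
print(parse_response(reply, expected_id=1))  # {'contents': []}
```

Keeping serialization and parsing in two small functions mirrors the protocol’s split between the client that issues requests and the server that answers them.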

Key features of MCP include:

- Standardized context serialization: Ensures context objects are encoded and decoded in a way that is compatible across platforms.

- Extensible schema support: Facilitates the adoption of new context types, future-proofing integration with emerging AI models and modalities.

- Privacy and access control mechanisms: Context objects can be tagged for encryption and restricted access, supporting compliance needs such as GDPR and HIPAA.

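- Flexible transport layers: MCP defines standard transports for local servers (stdio, over a subprocess’s standard input and output) and remote servers (HTTP, optionally streaming responses as Server-Sent Events), and implementations can add custom transports such as WebSockets, giving developers more integration options.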
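
On the stdio transport, the MCP specification has the client launch the server as a subprocess and exchange newline-delimited JSON-RPC messages over its standard input and output, with no raw line breaks inside a message. The sketch below shows only that framing, using the standard library; `write_message` and `read_messages` are hypothetical helper names, and an in-memory buffer stands in for the subprocess pipes.

```python
import io
import json
from typing import Any, Dict, Iterator, TextIO


def write_message(stream: TextIO, message: Dict[str, Any]) -> None:
    """Write one JSON-RPC message as a single newline-terminated line."""
    # json.dumps (without indent) escapes newline characters inside strings,
    # so the encoded message never contains a raw line break.
    stream.write(json.dumps(message) + "\n")
    stream.flush()


def read_messages(stream: TextIO) -> Iterator[Dict[str, Any]]:
    """Yield each JSON-RPC message from a newline-delimited stream."""
    for line in stream:
        if line.strip():  # skip blank lines defensively
            yield json.loads(line)


# Usage: an in-memory buffer stands in for the server process's stdin/stdout.
buffer = io.StringIO()
write_message(buffer, {"jsonrpc": "2.0", "method": "notifications/initialized"})
write_message(buffer, {"jsonrpc": "2.0", "id": 2, "method": "tools/list"})
buffer.seek(0)
for message in read_messages(buffer):
    print(message["method"])
```

Line-based framing keeps the transport trivial to implement in any language, which is one reason stdio works well for servers that run locally next to the AI application.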

By providing these comprehensive features in a clear, logical structure, the protocol enables organizations to optimize AI interoperability, maintain high standards of security and privacy, and adapt rapidly to innovation while complying with industry best practices.

Integration workflows and common use cases

The Model Context Protocol (MCP) is designed to support integration workflows and deliver valuable real-world use cases for both developers and enterprise organizations deploying artificial intelligence. One of MCP’s most significant strengths is that it enables plug-and-play interoperability between distinct AI agents, large language models, and multimodal processing pipelines.

By implementing a standardized format for sharing context, such as user prompts, session histories, metadata, and environmental variables, MCP removes the technical barriers that typically hinder compatibility among proprietary systems.

This enables organizations to orchestrate multi-agent workflows, where separate AI systems communicate and collaborate, efficiently passing along contextual information required for complex, cross-platform reasoning and automation. Such workflows are vital for use cases in conversational AI, decision support systems, and advanced task automation, where retaining prompt history and session state across distributed agents leads to smoother, more accurate outcomes.

The protocol’s extensible schema support further enhances its utility by allowing organizations to adapt context objects for emerging AI models and new modalities as their technology needs evolve. MCP simplifies integration with plugin APIs and web services, making it possible to inject, retrieve, and persist context across diverse tools. This level of flexibility empowers developers to augment capabilities with retrieval-augmented search engines, persistent personalization layers, and custom knowledge graphs.
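
To make the plug-and-play idea concrete, here is a minimal sketch of the server side of a tool integration: a dispatcher that answers the specification’s `tools/list` and `tools/call` methods, describing each tool’s arguments with a JSON Schema. The `summarize_ticket` tool and its registry are hypothetical, argument validation is deliberately simplified, and a real deployment would normally use one of the official MCP SDKs rather than hand-rolled dispatch.

```python
import json
from typing import Any, Dict


def summarize_ticket(arguments: Dict[str, Any]) -> str:
    """Hypothetical tool: return context about a support ticket."""
    return f"Ticket {arguments['ticket_id']} is open"


# Hypothetical tool registry: each entry carries a JSON Schema for its arguments.
TOOLS: Dict[str, Dict[str, Any]] = {
    "summarize_ticket": {
        "description": "Summarize a support ticket (illustrative only).",
        "inputSchema": {
            "type": "object",
            "properties": {"ticket_id": {"type": "string"}},
            "required": ["ticket_id"],
        },
        "handler": summarize_ticket,
    },
}


def error(request_id: Any, code: int, message: str) -> str:
    """Serialize a JSON-RPC 2.0 error response."""
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}})


def handle(raw: str) -> str:
    """Dispatch one JSON-RPC request to tools/list or tools/call."""
    request = json.loads(raw)
    request_id, method = request["id"], request["method"]
    params = request.get("params", {})
    if method == "tools/list":
        tools = [{"name": name, "description": spec["description"], "inputSchema": spec["inputSchema"]}
                 for name, spec in TOOLS.items()]
        result: Dict[str, Any] = {"tools": tools}
    elif method == "tools/call":
        spec = TOOLS.get(params.get("name"))
        if spec is None:
            return error(request_id, -32602, f"Unknown tool: {params.get('name')}")
        arguments = params.get("arguments", {})
        # Only the schema's "required" list is checked; full JSON Schema validation is omitted.
        missing = [key for key in spec["inputSchema"].get("required", []) if key not in arguments]
        if missing:
            return error(request_id, -32602, f"Missing arguments: {', '.join(missing)}")
        result = {"content": [{"type": "text", "text": spec["handler"](arguments)}], "isError": False}
    else:
        return error(request_id, -32601, "Method not found")
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "result": result})


# Usage: an agent calls the hypothetical tool with a placeholder ticket id.
call = {"jsonrpc": "2.0", "id": 7, "method": "tools/call",
        "params": {"name": "summarize_ticket", "arguments": {"ticket_id": "T-42"}}}
print(handle(json.dumps(call)))
```

Because each tool advertises its own schema through `tools/list`, a client can discover new capabilities at runtime instead of being recompiled for every integration.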

For example, in an enterprise scenario, MCP could be leveraged to connect virtual assistants, analytics bots, and customer service agents, all sharing relevant session context to deliver consistent, real-time insights and user-specific experiences.

Privacy and compliance stand out as foundational features within MCP’s integration workflows. Context objects can be encrypted, access-tagged, and subject to stringent retention policies, ensuring sensitive data remains protected and organizations remain compliant with major regulations like GDPR and HIPAA. This focus on privacy-first design allows for secure, ethical deployment of AI even in industries with heightened trust and data governance standards, such as healthcare, financial services, and legal tech.

MCP’s support for multiple transports, including stdio for local processes, HTTP for remote services, and custom transports, makes it adaptable to both cloud-based and on-premises environments. This versatility is essential for organizations seeking scalable AI integration, whether they deploy models in public cloud infrastructure or on secure local networks. As a result, workflows built on MCP are robust and highly customizable, able to accommodate new integrations and evolving operational requirements without a major overhaul.
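
For remote deployments, the Streamable HTTP transport in recent revisions of the MCP specification has the client POST each JSON-RPC message to a single endpoint, declare that it accepts either `application/json` or `text/event-stream` responses, and echo back any `Mcp-Session-Id` the server assigned. The sketch below only builds such a request with Python’s `urllib.request`; the endpoint URL and session id are placeholders, and it deliberately does not contact a server.

```python
import json
import urllib.request
from typing import Any, Dict, Optional

# Hypothetical endpoint; a real server publishes its own MCP endpoint URL.
MCP_ENDPOINT = "http://localhost:8080/mcp"


def build_post(message: Dict[str, Any], session_id: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) an HTTP POST carrying one JSON-RPC message."""
    headers = {
        "Content-Type": "application/json",
        # The server may answer with plain JSON or open a Server-Sent Events stream.
        "Accept": "application/json, text/event-stream",
    }
    if session_id is not None:
        # Echo the session id the server returned during initialization.
        headers["Mcp-Session-Id"] = session_id
    body = json.dumps(message).encode("utf-8")
    return urllib.request.Request(MCP_ENDPOINT, data=body, headers=headers, method="POST")


req = build_post({"jsonrpc": "2.0", "id": 3, "method": "tools/list"}, session_id="example-session")
print(req.get_method(), req.full_url)  # POST http://localhost:8080/mcp
# Sending it would be urllib.request.urlopen(req), which needs a running server.
```

The JSON-RPC payload is identical to the one carried over stdio; only the framing and headers change, which is what lets the same workflow move between a laptop and a cloud deployment.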

By supporting these workflows, MCP streamlines omnichannel AI integrations and opens up innovative opportunities for context-aware search, personalized interactions, and knowledge management.

Security, privacy, and trust

Security, privacy, and trust are at the core of the open-source Model Context Protocol (MCP), because these pillars are vital for robust and responsible AI integrations in modern enterprises and public-facing environments. MCP incorporates security measures that safeguard sensitive context data throughout its lifecycle. Each context object can be encrypted and annotated with access control policies, ensuring that only authorized users and systems can view, process, or transmit context information. This approach mitigates the risks of data leakage, unauthorized access, and malicious manipulation, making MCP suitable for deployments where data integrity and confidentiality must be guaranteed.

Organizations implementing MCP can tag context fragments for retention, propagation, and downstream sharing, setting fine-grained rules about how context is stored, transferred, and eventually deleted. These privacy controls extend to audit logging options, enabling transparency in how data is accessed and modified, which is particularly important for high-trust sectors like healthcare, finance, and legal services. By allowing strict data governance and regulatory alignment, MCP empowers organizations to confidently deploy AI solutions without sacrificing user privacy or exposing themselves to legal liability.
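
The tagging, retention, propagation, and audit controls described above are policies an implementation enforces around the context it serves. The sketch below is a hypothetical application-level store, not a structure defined by the MCP specification: `ContextFragment`, `GovernedContextStore`, `access_tags`, `expires_at`, and `shareable_downstream` are illustrative names chosen to show role-based reads, retention purging, downstream sharing rules, and an audit trail.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import List, Set


@dataclass
class ContextFragment:
    """Hypothetical context fragment annotated with governance tags."""
    content: str
    access_tags: Set[str]               # roles permitted to read the fragment
    expires_at: datetime                # retention deadline (timezone-aware)
    shareable_downstream: bool = False  # may be forwarded to other agents


@dataclass
class GovernedContextStore:
    fragments: List[ContextFragment] = field(default_factory=list)
    audit_log: List[str] = field(default_factory=list)

    def read(self, principal: str, roles: Set[str], now: datetime) -> List[str]:
        """Return unexpired fragments the caller's roles permit, and audit the access."""
        visible = [f.content for f in self.fragments
                   if f.access_tags & roles and f.expires_at > now]
        self.audit_log.append(f"{now.isoformat()} read by {principal}: {len(visible)} fragment(s)")
        return visible

    def forward_downstream(self, now: datetime) -> List[str]:
        """Return only unexpired fragments explicitly tagged for propagation."""
        return [f.content for f in self.fragments
                if f.shareable_downstream and f.expires_at > now]

    def purge_expired(self, now: datetime) -> int:
        """Delete fragments past their retention deadline and audit the deletion."""
        kept = [f for f in self.fragments if f.expires_at > now]
        removed = len(self.fragments) - len(kept)
        self.fragments = kept
        self.audit_log.append(f"{now.isoformat()} purge: {removed} fragment(s) deleted")
        return removed


# Usage with a fixed clock so the outcome is reproducible.
now = datetime(2025, 1, 1, tzinfo=timezone.utc)
store = GovernedContextStore([
    ContextFragment("Patient allergy list", {"clinician"}, now + timedelta(days=30)),
    ContextFragment("Session greeting", {"clinician", "support"}, now - timedelta(days=1), True),
])
print(store.read("agent-7", {"support"}, now))  # []  (greeting expired, allergy list restricted)
print(store.purge_expired(now))                 # 1
```

Passing the clock in explicitly keeps retention decisions deterministic and auditable, which matters when a regulator asks why a given fragment was or was not visible at a given time.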

Trustworthiness in the Model Context Protocol is strengthened by its transparent, open-source approach and active community involvement. With its specification and source code publicly accessible and thoroughly documented, MCP invites continuous security audits, reviews, and collaborative improvement from researchers and developers worldwide. This open participation accelerates vulnerability detection and timely patching, helping MCP stay resilient against emerging threats and maintain strong security standards. The protocol also helps organizations build trust with their users and stakeholders by demonstrating compliance, ethical data handling, and adherence to industry security benchmarks.

By embedding security, privacy, and trust features deeply into the protocol, MCP sets a new standard for context management in AI systems, giving enterprises, developers, and researchers the assurance they need to drive innovation in sensitive and highly regulated fields.

Community adoption, ecosystem impact, and future direction

The open-sourcing of the Model Context Protocol by Anthropic is paving the way for broad adoption across the artificial intelligence ecosystem. Tech companies, research institutions, and independent developers increasingly use MCP for scalable, reliable, and secure integrations, and it is quickly becoming a baseline for interoperability in complex agent-based systems, multimodal workflows, and AI orchestration services.

Looking ahead, MCP is expected to expand with new schema extensions, improved support for multimodal (text, audio, image) context, and richer integrations with LLM platforms and open-source AI agents. The protocol is well-positioned to facilitate the next generation of context-aware AI, personalized model responses, and adaptive decision support.

Conclusion

The Model Context Protocol is a breakthrough in the development of standardized, secure, and extensible AI context management solutions. Its influence is already visible across multi-agent workflows, LLM plugin integrations, privacy-centric enterprise applications, and open-source communities.

Overall, MCP equips developers and organizations with a future-proof framework for context exchange, ensuring that as artificial intelligence evolves, interoperability, security, and trust remain at the forefront of innovation.

SonarQube MCP Server

AI-assisted development is accelerating, but it raises hard questions about governance, security, and quality.

The SonarQube MCP Server answers them by creating a trusted, secure bridge between your AI agents and SonarQube, delivering instant, governed feedback right inside your AI-native workflow. Running locally as a Model Context Protocol (MCP) server, it gives AI tools deep, project-aware context, acting as a universal translator that standardizes how AI applications access SonarQube’s analysis, from checking code snippets for issues to verifying a project’s Quality Gate status. The result is a transformation: your AI coding assistant becomes a true code review and quality co-pilot that enforces your standards without forcing developers to context-switch.

In practice, this means you can manage issues across projects, automate quality checks in CI/CD pipelines, run on-demand analysis before commit, streamline issue triage, monitor project readiness, and, for teams with Advanced Security, surface Software Composition Analysis (SCA) findings, all through your AI agent. The server integrates with GitHub Copilot (including VS Code), Gemini CLI, Claude, Windsurf, Cursor, and Devin, and is available across leading MCP marketplaces. It’s completely open source, free, and deployable in minutes via Docker or by running the Java JAR; all you need is an active SonarQube Cloud or SonarQube Server instance. Start today to bring your trusted code quality standards into the era of AI-assisted development and lift your team’s software quality to the next level.