Claude Opus 4.6 has just been released, and we are officially in the age of hyper-speed coding. These incredible tools are able to generate code at even more incredible speeds.

However, this capability has downsides: AI tools have blind spots, and speed does not equal quality. They can introduce security vulnerabilities, use deprecated libraries, or write logic that technically works but is a nightmare to maintain six months from now. If you’re not careful, you as a software developer end up as a “janitor”: reading line by line, reviewing and cleaning up bugs, and tediously explaining to the model what it did wrong.

But there’s a better way! We can close the loop. If we give Claude direct access to SonarQube Cloud, it can review its own code and self-correct. It can write code, scan it, realize it introduced a security hole, fix it, and then hand you the clean result.

Here is how we architect this flow:

- Agent generates code locally.

- Agent triggers the sonar-scanner binary to upload a snapshot.

- SonarQube Cloud does the code review and processes the analysis asynchronously.

- Agent queries the SonarQube MCP Server to fetch the specific errors.

- Agent refactors the code autonomously until the Quality Gate passes.
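The flow above is a scan-and-fix loop with a stopping condition. Here is a minimal Python sketch of that control flow. The `ScanResult` type, the `scan` and `fix` callables, and the `max_rounds` cap are all hypothetical names introduced for illustration; in the real setup Claude performs these steps itself through the scanner and the MCP server.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ScanResult:
    """Hypothetical summary of one analysis: gate status plus reported issues."""
    gate_passed: bool
    issues: List[str]


def run_until_clean(
    scan: Callable[[], ScanResult],
    fix: Callable[[List[str]], None],
    max_rounds: int = 5,
) -> bool:
    """Scan, fix what was reported, and scan again.

    Returns True as soon as a scan passes the gate, and False if
    max_rounds scans have all failed (the cap is an assumption to
    avoid looping forever).
    """
    for _ in range(max_rounds):
        result = scan()
        if result.gate_passed:
            return True
        fix(result.issues)
    return False
```

The cap on rounds is a design choice: an agent that cannot clear the gate after a few attempts should stop and ask a human for help.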

1. Prerequisites

To follow along, you need the basic plumbing in place.

- SonarScanner CLI: The engine that packages code for analysis.

  - Quick check: Run sonar-scanner -v. (Ensure you have a Java Runtime installed.)

- SonarQube MCP Server: The bridge that allows Claude to "speak" SonarQube.

  - Setup Guide: Official SonarQube MCP Docs

  Note: we recommend using the manual JSON configuration.

- Claude Code: Installed and authenticated
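If you would rather check the plumbing in one go, a small script can look for the executables on your PATH. This is only a sketch: it assumes the binaries are named `sonar-scanner`, `java`, and `claude`, and it does not check the MCP server, which is configured separately.

```python
import shutil
from typing import Callable, Iterable, List, Optional

# Assumed executable names for the prerequisites listed above.
REQUIRED_TOOLS = ("sonar-scanner", "java", "claude")


def missing_tools(
    tools: Iterable[str] = REQUIRED_TOOLS,
    which: Callable[[str], Optional[str]] = shutil.which,
) -> List[str]:
    """Return the tools that cannot be found on PATH, in the order given."""
    return [tool for tool in tools if which(tool) is None]


# Example usage:
#   missing = missing_tools()
#   if missing:
#       print("Install these first:", ", ".join(missing))
```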

2. Project configuration

So we don’t have to explain the project structure to the scanner every time we run a prompt, drop a sonar-project.properties file in your project root.

Create the file and paste this in:

sonar.projectKey=YOUR_PROJECT_KEY

sonar.organization=YOUR_ORG_KEY

sonar.sources=.

sonar.sourceEncoding=UTF-8

sonar.exclusions=**/node_modules/**,**/dist/**,**/.git/**,**/venv/**

sonar.qualitygate.wait=true

3. Behavior enforcement

We need to tell Claude that quality isn’t optional. We can do this by creating an AGENTS.md file in the root directory with the following rules:

1. You MUST verify all generated code before asking me to push.

2. To verify code, run the `sonar-scanner` command.

3. When running the scanner, use the `SONAR_TOKEN`, which I will have exported in the session.

4. After scanning, use your MCP tools to check the Quality Gate status or read the scanner output to identify issues.

5. If SonarQube reports bugs or smells, fix them immediately and re-scan. If low test coverage is causing a failed quality gate, you MUST treat this as a blocking issue requiring code generation (Unit Tests).

Only recommend pushing when the Quality Gate PASSES.

4. Seeing it in action (The fun part)

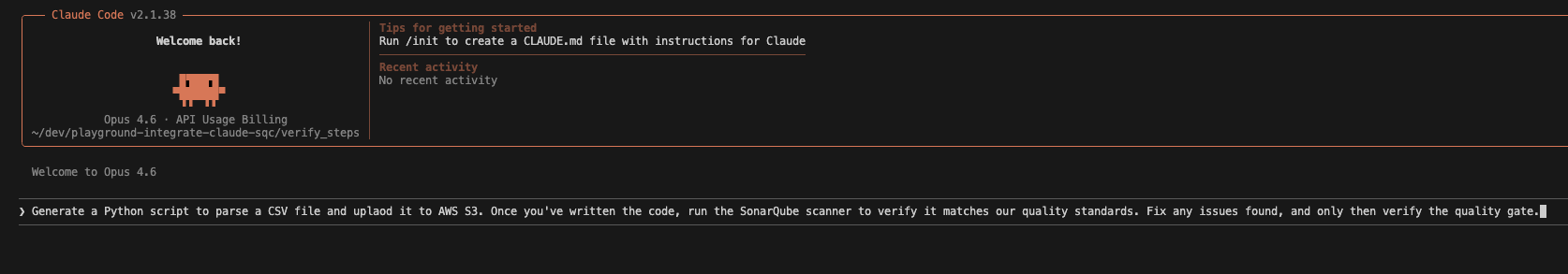

Now that we are set up, let's look at a real run. I'm going to ask Claude to generate a Python script that uploads a CSV to AWS S3, a task that often hides security risks.

The prompt: I start by exporting my token in the session and then launching Claude. Prefixing the export command with a space keeps it out of the history in some shells. If the token is already set as an environment variable, you can skip this step, since the sonar-scanner binary automatically reads SONAR_TOKEN.

export SONAR_TOKEN=your_token_value

claude

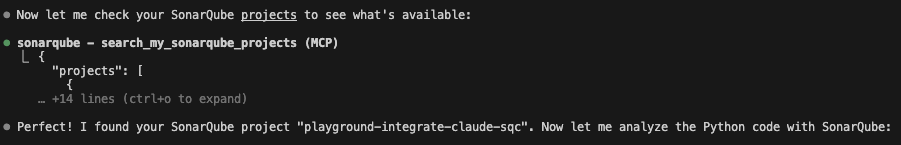
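Because the scanner reads SONAR_TOKEN from the environment, the token never needs to appear on a command line. A wrapper script could take advantage of that, as in this sketch. The function names `scanner_invocation` and `run_scan` are hypothetical, and the fail-fast check is an assumption about what you would want, not something the scanner requires.

```python
import os
import subprocess
from typing import Dict, List, Mapping, Tuple


def scanner_invocation(environ: Mapping[str, str]) -> Tuple[List[str], Dict[str, str]]:
    """Build the command and environment for a scan.

    The token stays in the environment and is never added to the
    argument list, so it does not show up in process listings.
    """
    if not environ.get("SONAR_TOKEN", "").strip():
        raise RuntimeError("SONAR_TOKEN is not set; export it before scanning")
    return ["sonar-scanner"], dict(environ)


def run_scan() -> int:
    """Run the scanner in the current directory and return its exit code."""
    cmd, env = scanner_invocation(os.environ)
    return subprocess.run(cmd, env=env, check=False).returncode
```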

The context check: Claude is smart enough to ensure it's looking at the right project. It uses the MCP tool to search my account.

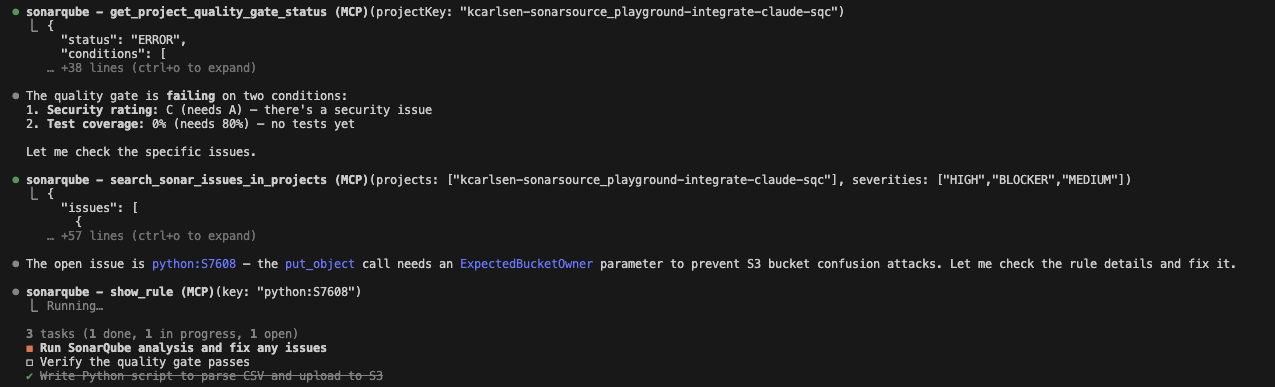

The vulnerability: Here is where it gets interesting. Claude wrote the code and ran the scanner, and the SonarQube Cloud Quality Gate failed on several conditions, including test coverage and a high-severity issue: rule S7608, Missing Bucket Ownership Verification.

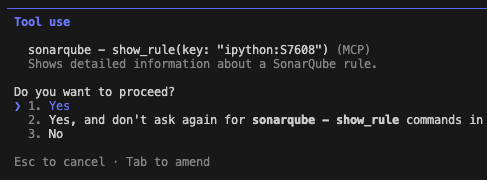
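To make "failed on several conditions" concrete, here is a sketch that pulls the failing conditions out of a Quality Gate status payload. It assumes the payload follows the shape returned by the SonarQube Web API endpoint `api/qualitygates/project_status`. The values in `SAMPLE` are invented for illustration and are not taken from the run shown in the screenshots.

```python
from typing import Any, Dict, List


def failing_conditions(payload: Dict[str, Any]) -> List[str]:
    """Return one readable line per Quality Gate condition with status ERROR."""
    conditions = payload.get("projectStatus", {}).get("conditions", [])
    return [
        f"{c.get('metricKey')}: actual {c.get('actualValue')}, "
        f"threshold {c.get('errorThreshold')}"
        for c in conditions
        if c.get("status") == "ERROR"
    ]


# Illustrative payload only; the numbers are made up.
SAMPLE = {
    "projectStatus": {
        "status": "ERROR",
        "conditions": [
            {"status": "ERROR", "metricKey": "new_coverage",
             "actualValue": "0.0", "errorThreshold": "80"},
            {"status": "OK", "metricKey": "new_duplicated_lines_density",
             "actualValue": "0.0", "errorThreshold": "3"},
        ],
    }
}

print(failing_conditions(SAMPLE))
```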

If I were coding this manually, I might have missed that parameter. But this is where Claude Opus 4.6 really shines, as it drills into the rule to understand exactly why it failed:

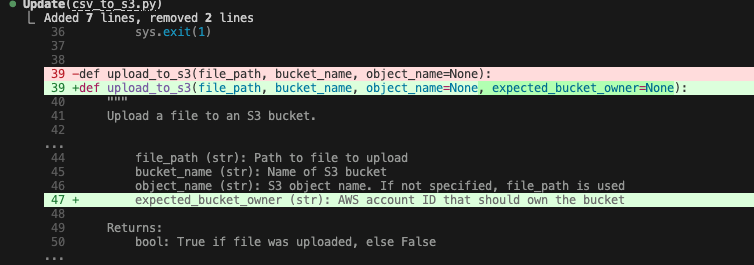

The fix: Claude reads the documentation from the tool output, realizes it needs the expected_bucket_owner parameter, and applies the fix autonomously. Opus 4.6 is particularly good at this multi-step reasoning, and it easily connects the error log to the documentation without needing a human hint.

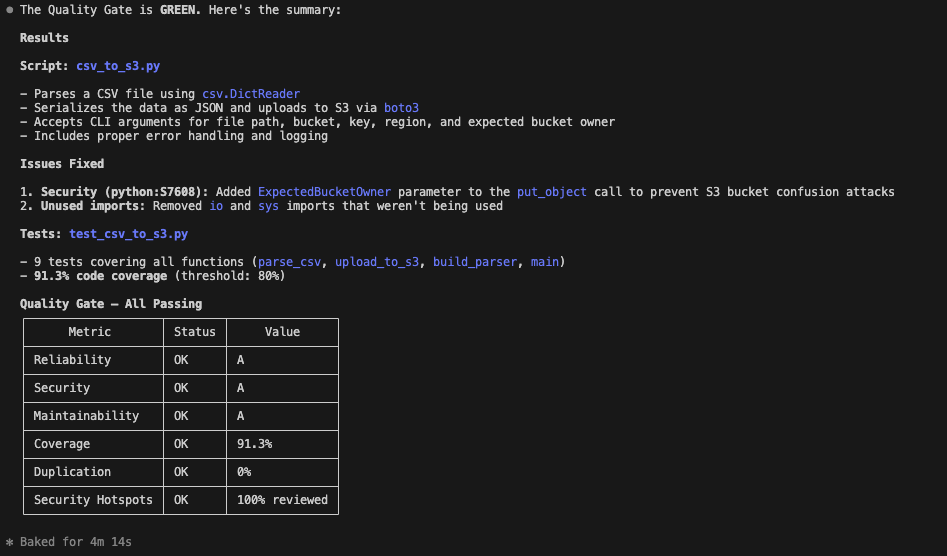
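The code Claude actually produced is only visible in the screenshot, so the following is a sketch of what such a fix could look like rather than a copy of it. It assumes a boto3 S3 client and its `put_object` call, which accepts an `ExpectedBucketOwner` argument. The function name `upload_csv` and its signature are hypothetical.

```python
from typing import Any


def upload_csv(
    s3_client: Any,
    path: str,
    bucket: str,
    key: str,
    expected_bucket_owner: str,
) -> None:
    """Upload a CSV file to S3 with bucket ownership verification.

    Passing ExpectedBucketOwner makes S3 reject the request if the
    bucket belongs to a different AWS account than the one given.
    """
    with open(path, "rb") as body:
        s3_client.put_object(
            Bucket=bucket,
            Key=key,
            Body=body,
            ContentType="text/csv",
            ExpectedBucketOwner=expected_bucket_owner,
        )


# Example usage (requires boto3 and AWS credentials; values are placeholders):
#   import boto3
#   upload_csv(boto3.client("s3"), "report.csv", "my-bucket",
#              "reports/report.csv", "123456789012")
```

Taking the client as a parameter keeps the function easy to unit test with a stub, which also helps with the coverage condition on the Quality Gate.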

The result: Finally, it runs a verification scan. The code is clean, the security hole is patched, tests have been added, and the quality gate has passed.

That’s it. By combining the reasoning depth of Claude Opus 4.6 with the strict code review and validation of SonarQube, you now have an AI agent that doesn't just write code, but effectively holds itself accountable to your engineering standards.