

In the first five chapters of our State of Code Developer Survey report, we explored how AI has transitioned from a coding experiment to a daily professional necessity. We’ve examined the growing "trust gap," the sprawl of ungoverned tools, and the shifting nature of developer work.

In Chapter 5, we uncovered the reality of "the new developer toil." While 75% of developers hoped AI would reduce tedious tasks, our data showed that toil hasn’t disappeared—it has simply changed shape. Frequent AI users now spend nearly a quarter of their work week managing technical debt and correcting or rewriting unreliable AI-generated output. This effectively moved the pressure downstream to code management and verification.

This brings us to the sixth installment in our series, where we examine a critical tension in modern development: the tricky relationship between AI and code security.

Rising developer anxiety, stagnant preventative action

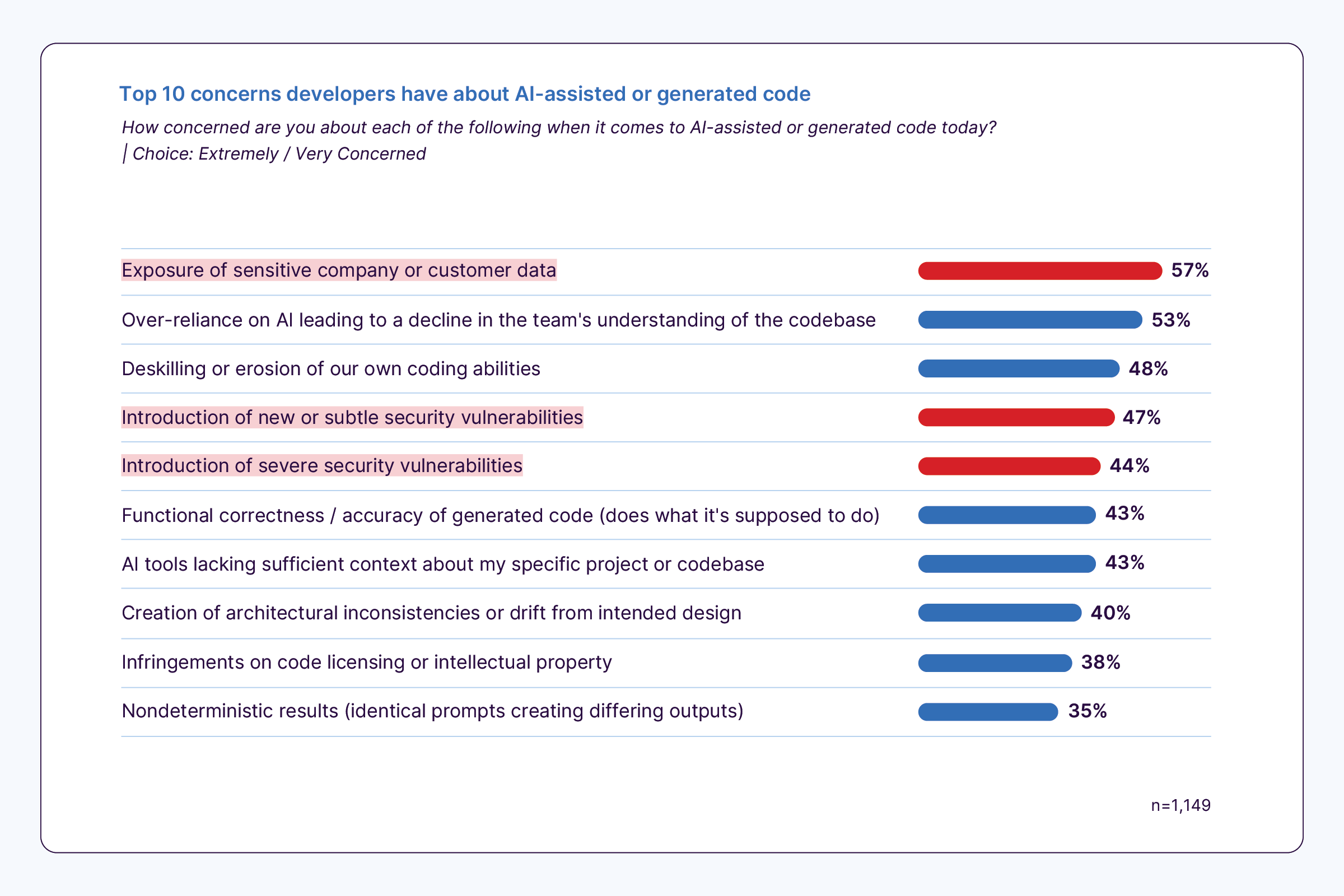

According to our study, the biggest concern developers have about AI code generation is security.

57% of developers worry that using AI risks exposing sensitive company or customer data

This is a significant majority voicing a clear fear about tools they are increasingly required to use.

However, there is a stark disconnect between this developer anxiety and organizational action. Despite these high levels of concern, only 37% of organizations have become more rigorous about code security because of AI.

For leaders, this creates a massive blind spot. While developers are on high alert for new or subtle vulnerabilities (47%) and severe security flaws (44%) introduced by AI, official governance frameworks are struggling to keep pace.

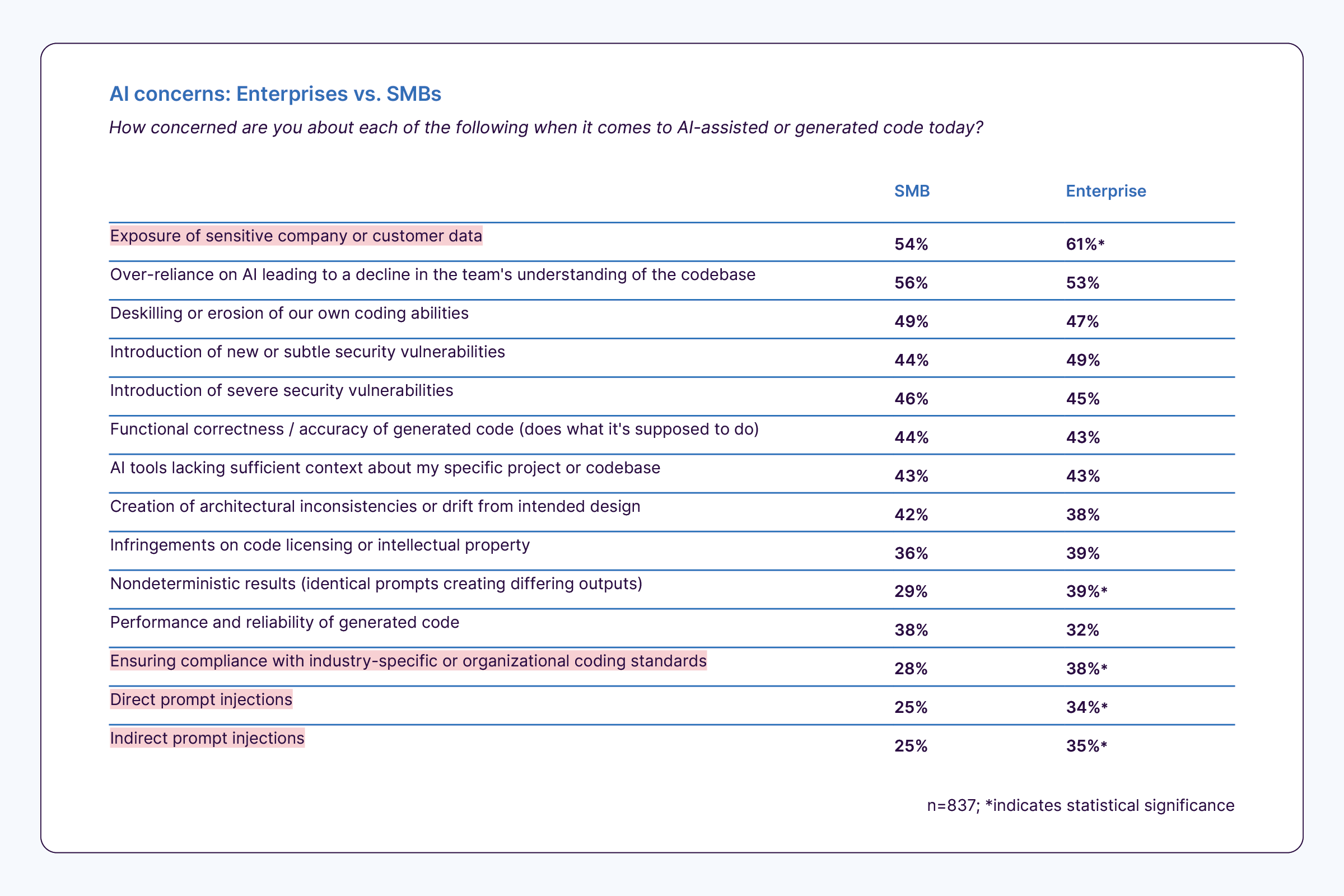

Big companies feel the risk most

These concerns are most acute in large enterprise environments.

In organizations with over 1,000 employees, the concern regarding data exposure jumps to 61%

Enterprises are also significantly more concerned than smaller businesses about specific, advanced attack vectors:

- Direct prompt injections: 34% for enterprise vs. 25% for SMB.

- Indirect prompt injections: 35% for enterprise vs. 25% for SMB.

- Compliance: 38% of enterprises worry about meeting industry-specific standards, compared to 28% of SMBs.

The trap of code that looks correct but isn't

Why is this risk so difficult to manage? The issue lies in the deceptive nature of the logic generated by LLMs.

61% of developers agree that AI often produces code that "looks correct but isn't reliable."

Unlike a syntax error that breaks the build immediately, AI can generate plausible-looking logic that contains hidden bugs or security vulnerabilities. This can create a false sense of security that leads teams to skip thorough reviews, essentially building a "security debt" that is much more expensive to fix once it reaches production.
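To make the "looks correct but isn't" problem concrete, here is a hypothetical Python example (not taken from the survey) of the kind of lookup an assistant might plausibly generate. The table, the names, and both functions are illustrative assumptions. The unsafe version passes a happy-path test, yet it builds SQL by string interpolation and is therefore open to SQL injection; the safe version uses a parameterized query.

```python
import sqlite3


def setup() -> sqlite3.Connection:
    # Hypothetical in-memory database with two example users.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, email TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "alice@example.com"), ("bob", "bob@example.com")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list[str]:
    # Looks correct and works for normal input, but interpolating `name`
    # into the SQL text lets crafted input rewrite the query itself.
    query = f"SELECT email FROM users WHERE name = '{name}'"
    return [row[0] for row in conn.execute(query)]


def find_user_safe(conn: sqlite3.Connection, name: str) -> list[str]:
    # Parameterized query: the driver treats `name` strictly as data.
    rows = conn.execute("SELECT email FROM users WHERE name = ?", (name,))
    return [row[0] for row in rows]


conn = setup()
print(find_user_unsafe(conn, "alice"))         # ['alice@example.com']
print(find_user_unsafe(conn, "x' OR '1'='1"))  # returns every user's email
print(find_user_safe(conn, "x' OR '1'='1"))    # []
```

A reviewer skimming the first function sees a reasonable query and a passing test; the flaw only shows up with hostile input, which is exactly the kind of issue that slips through when review is rushed.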

This security debt is further compounded by the fragmented nature of the modern AI toolchain. On average, teams juggle four different AI tools, and 35% of that usage happens through personal, ungoverned accounts. This "bring your own AI" (BYOAI) culture means that even as organizations try to implement stricter controls, a significant portion of the code is being generated and handled outside the secure corporate environment, making centralized governance nearly impossible.

Moving toward a culture of verification

The takeaway for engineering leaders is clear: you cannot rely on AI to secure the code it creates. Only 28% of developers currently use AI agents for security vulnerability patching, which suggests a lack of confidence in AI's ability to solve the very problems it might introduce.

To escape this paradox, organizations can implement a "vibe, then verify" approach. This means granting teams the freedom to "vibe"—to experiment and create boldly with AI—while maintaining a rigorous, deterministic framework to "verify" every line of code.

By integrating automated verification tools like SonarQube directly into the workflow, teams can ensure that the speed gains of AI lead to real-world quality and security improvements, rather than just faster-growing risk.
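The "verify" half does not have to depend on another model. As a toy illustration of what a deterministic check looks like (this is not how SonarQube is implemented, and `find_dynamic_sql` is a hypothetical name), the sketch below uses Python's standard `ast` module to flag `execute()` or `executemany()` calls whose SQL text is assembled at runtime from an f-string, `%` or `+` operations, or `.format()`. It only inspects the expression passed directly to the call, so it misses queries assigned to a variable first; production analyzers close that gap with data-flow (taint) analysis.

```python
import ast


def _is_dynamic_sql(node: ast.AST) -> bool:
    # f-strings, "..." % args, "..." + var, and "...".format(...) all
    # build the query text at runtime.
    if isinstance(node, ast.JoinedStr):
        return True
    if isinstance(node, ast.BinOp) and isinstance(node.op, (ast.Mod, ast.Add)):
        return True
    if (isinstance(node, ast.Call)
            and isinstance(node.func, ast.Attribute)
            and node.func.attr == "format"):
        return True
    return False


def find_dynamic_sql(source: str) -> list[int]:
    """Return line numbers of execute()/executemany() calls whose first
    argument is built dynamically (hypothetical rule, for illustration)."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if (isinstance(node, ast.Call)
                and isinstance(node.func, ast.Attribute)
                and node.func.attr in {"execute", "executemany"}
                and node.args
                and _is_dynamic_sql(node.args[0])):
            findings.append(node.lineno)
    return sorted(findings)


SAMPLE = '''
def lookup(conn, name):
    return conn.execute(f"SELECT email FROM users WHERE name = '{name}'")

def lookup_safe(conn, name):
    return conn.execute("SELECT email FROM users WHERE name = ?", (name,))
'''

print(find_dynamic_sql(SAMPLE))  # [3]
```

Because a rule like this is deterministic, the same code always produces the same findings, which is what makes it suitable as an automated gate in a CI pipeline regardless of which tool, or which person, wrote the code.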

Read the full report

This security gap is just one part of the story. The full State of Code Developer Survey report covers the impact of AI on technical debt, agentic workflows, and the differing perspectives of junior and senior developers.