In the first three blog articles of this series, we explored the new daily habit of AI coding, the critical trust gap between generation and correctness, and the rapid sprawl of "bring your own AI" tools within software engineering teams. We learned that while AI is accelerating the speed of writing code, it has created a dangerous verification bottleneck.

But the evolution of AI doesn't stop at assistants that wait for a prompt. In the fourth chapter of our State of Code Developer Survey report, we examine the next major shift in the software development lifecycle: the move toward autonomous agents.

The data suggests we have officially entered the second act of AI coding—where tools are no longer just passive helpers but active teammates capable of goal-driven action.

Agentic AI is moving from experiment to everyday tool

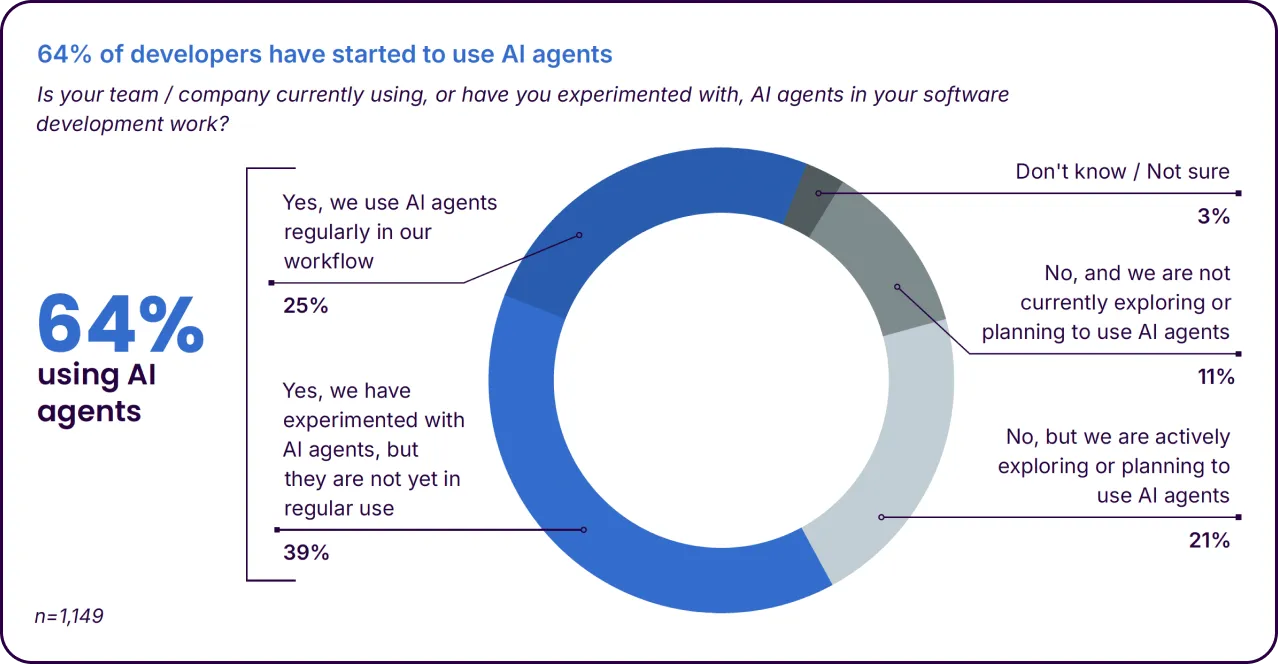

Experimenting with autonomous agents is quickly giving way to [in]formal integration. Our survey of over 1,100 software developers reveals that agentic AI has moved far beyond the "hobbyist" phase.

Currently, 64% of developers have started to use AI agents in their development work. This total includes 39% who have begun experimenting with agentic workflows and 25% of developers who now use agentic AI tools regularly in their daily professional routines.

This rapid adoption signifies a fundamental change in how work is orchestrated. We are moving from a model where humans perform every individual task to one where developers define goals and supervise autonomous systems that execute multi-step processes.

Use cases match AI’s natural strengths

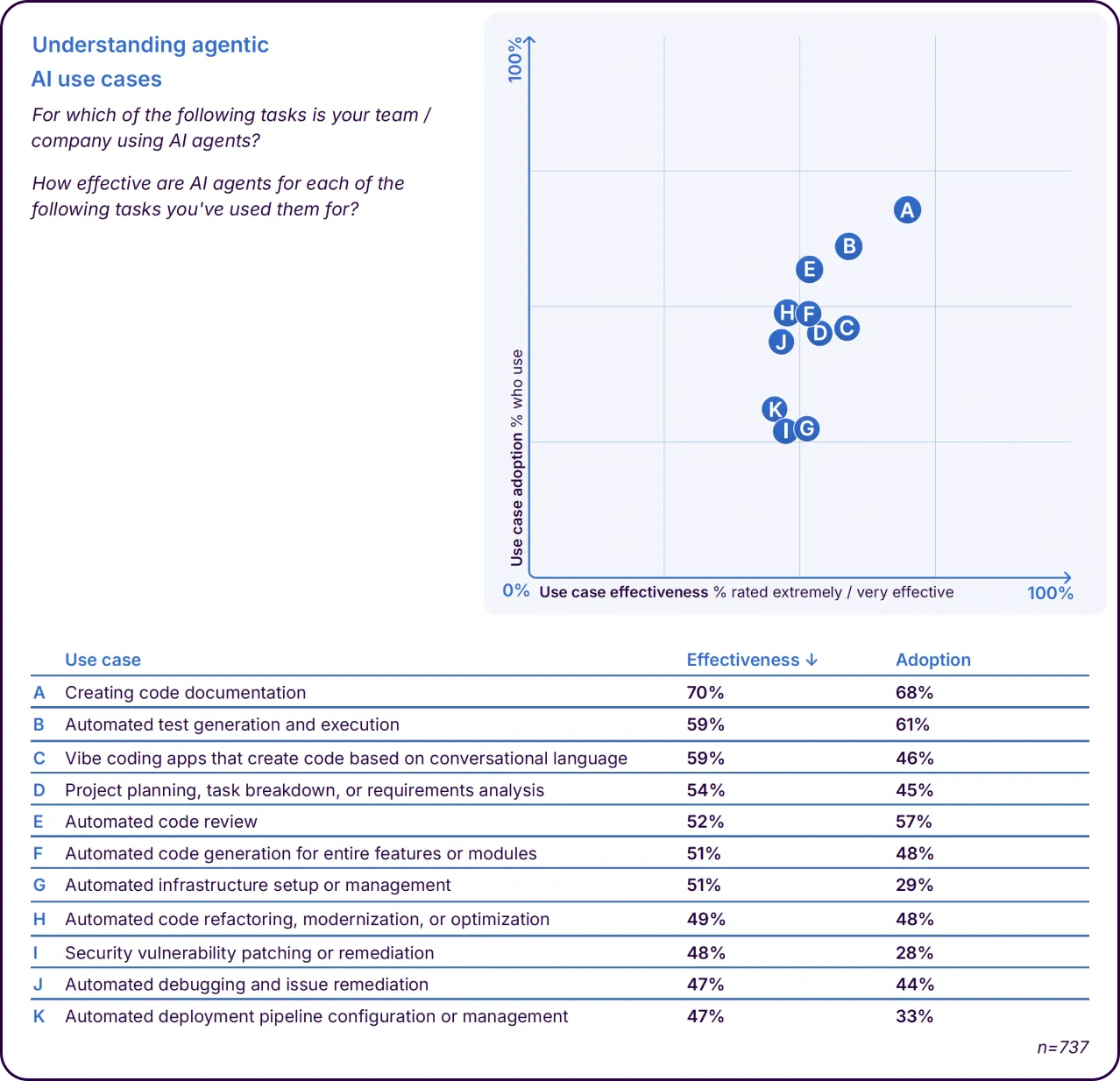

The data shows that developers are being pragmatic about where they deploy agents, focusing on areas where AI already demonstrates high levels of capability. Among the developers using agentic AI, the top use cases align with the natural strengths of large language models:

- 68% of developers use agents for creating code documentation

- 61% of developers use agents for automated test generation and execution

- 57% of developers use agents for automated code review

In contrast, high-stakes tasks like security vulnerability patching remain the least common use case, utilized by only 28% of developers. This selective deployment indicates that while developers trust agents to handle "toil" tasks like documentation and testing, they remain cautious about giving up control on mission-critical code security remediation.

The effectiveness gap persists

While usage is growing, the perceived value of these agents varies significantly by task. For example, 70% of developers rate agents as effective for documentation, which explains why it is the most adopted use case. However, only 52% find agents highly effective for automated code review.

This disparity suggests that as AI shifts from assistants to agents, the quality of the output remains a central concern. With basic AI assistants, there is always a developer in the loop to verify, or at least review, the output before it's committed. However, as tools become more autonomous, we face a new risk: the verification step can easily be skipped in the rush to prioritize productivity. While developers are finding real value in automating the tasks they dislike most, they still see gaps in an agent's ability to handle the nuanced, complex logic required for deep review or maintaining mission-critical systems.

A powerful force multiplier for smaller teams

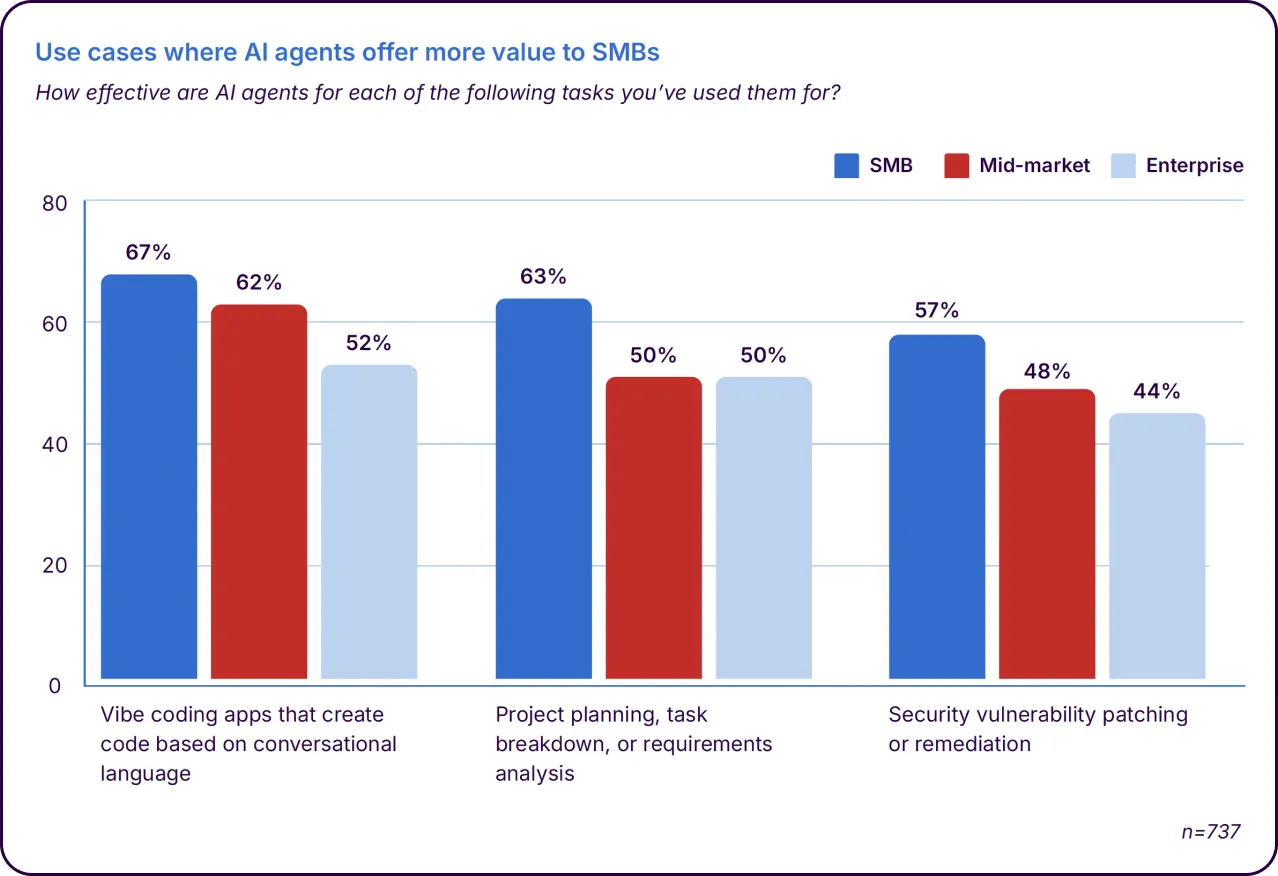

One of the most fascinating findings in Chapter 4 is how organization size shapes agent effectiveness. Small-to-medium businesses (SMBs) appear to be gaining the most immediate value from these autonomous tools.

Developers at SMBs report a 67% effectiveness rate for "vibe coding" tasks—using conversational language to create apps—compared to only 52% for their enterprise peers. This suggests that for smaller, agile teams, agents are acting as a significant force multiplier, allowing them to sprint ahead with generative tasks that might otherwise require more human headcount.

What this means for the future of code health

The move toward agentic AI reinforces the central theme of our research: generating code faster—whether by a developer or an agent—is only half the battle. As agents begin to contribute even larger volumes of code to your codebase, the need for an independent, deterministic verification layer becomes a strategic necessity.

Automating the "vibe" phase of development with agents only works if you have an equally robust, automated way to verify the output. Without guardrails, the second act of AI risks flooding development pipelines with unreliable code that simply moves the time-consuming work from "writing" to "fixing." Ultimately, autonomous agents require strict oversight and verification in high-risk operations. You wouldn't let a junior software developer work on mission-critical projects without oversight, and the same standard must apply to agents.

Ready for more?

The shift to agents is just one part of the story. The full State of Code Developer Survey report dives deeper into how these new workflows are impacting technical debt and the "engineering productivity paradox."