Code quality

How SonarQube minimizes false positives in code analysis below 5%

Read on to learn how SonarQube’s static code analysis engine works under the hood and the specific strategies that help it deliver accurate results.

Read article >

Exploring your current architecture with SonarQube

You have access to the accurate architectures of all your applications, which automatically refresh during every analysis, with no configuration required.

Read article >

Automating quality gate success with Claude Opus 4.6 and SonarQube MCP

We’ve all been there: you push a feature branch on a Friday afternoon, convinced it is solid. You switch to the next task, only to get a notification twenty minutes later: quality gate failed.

Read article >

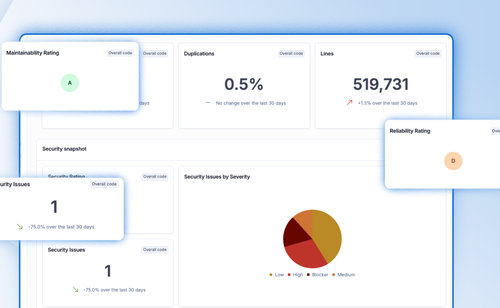

Using dashboards in SonarQube Cloud

Visualizing key code quality and security metrics for your SonarQube Cloud projects just became easier with the general availability of customizable project dashboards.

Read article >

Stop malicious packages in your CI/CD pipeline with SonarQube

“Malware”, short for “malicious software”, has been around for decades, starting with the first computer viruses of the 1990s. Early malware was mostly experimentation and pranks.

Read article >

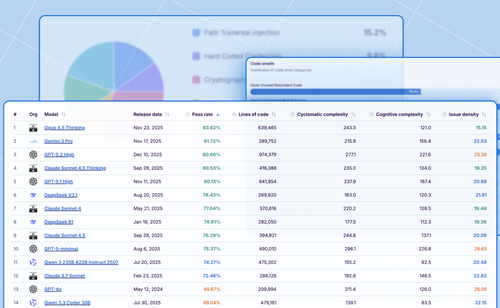

How to choose your LLM without ruining your Java code

When evaluating a new AI model, ensuring the code compiles and executes is only the baseline. Experienced developers know that functionality is just the first step; the true standard for production-ready software is code that is reliable, maintainable, and secure.

Read article >