Introduction

AI adoption is transforming the way businesses operate. A recent Bain study found that 87% of organizations have already deployed or are piloting generative AI, and adoption is growing across all applications.

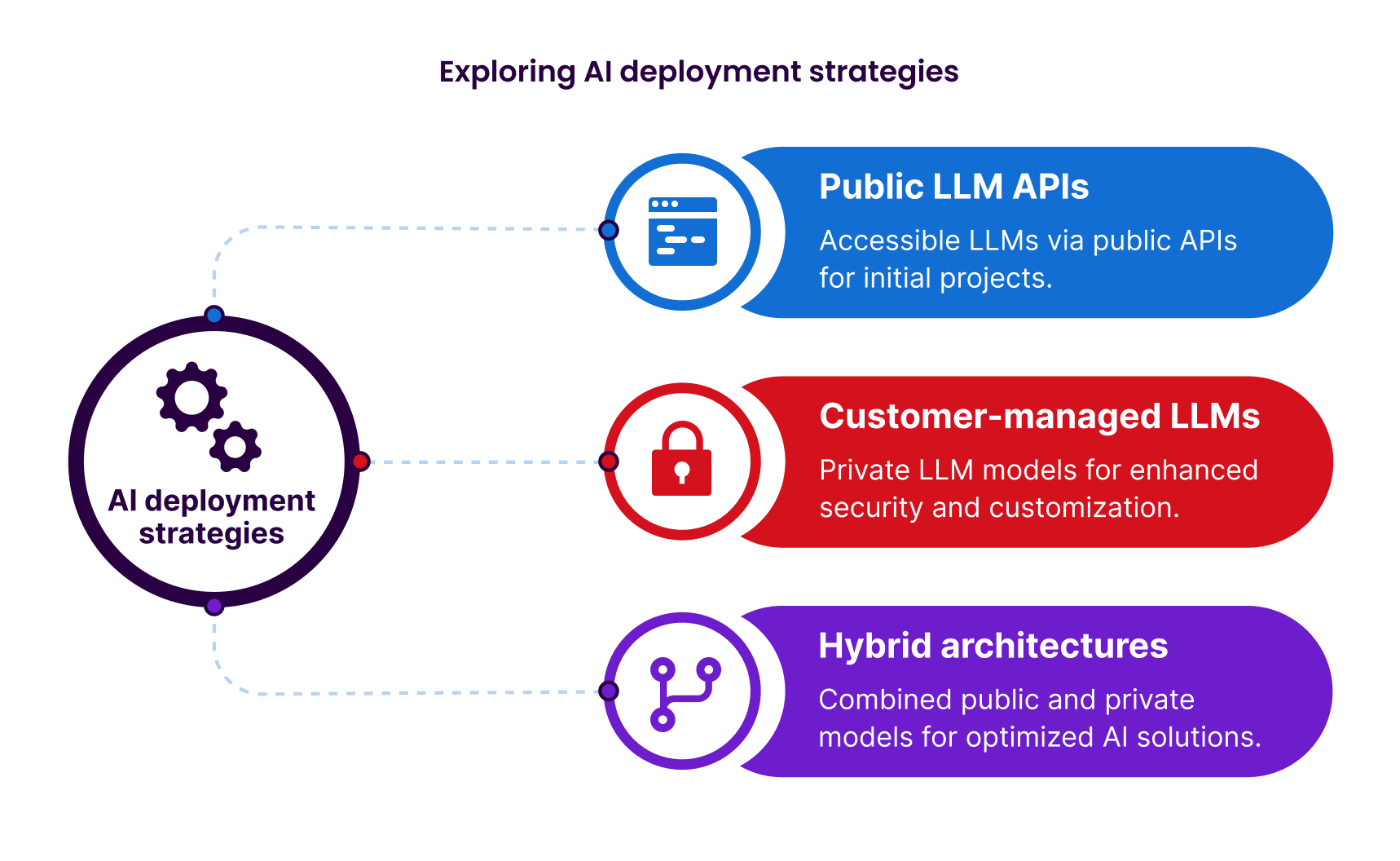

Adoption of powerful large language models (LLMs) is accelerating, driven by the wide range of deployment options now available. Enterprises can choose from public API services like OpenAI’s GPT-4o and Anthropic’s Claude 3.7 Sonnet, customer-managed solutions such as Azure OpenAI Service, or hybrid architectures that blend both approaches. This variety gives organizations more flexibility than ever in how they put LLMs to work.

We'll discuss how to build LLM integrations that aren’t limited to a single provider, giving businesses the freedom to choose the best tools for their needs. We’ll explore the range of deployment models available, illustrate how different approaches can add value at various stages of the Software Development Lifecycle (SDLC), and show how the SonarQube platform provides choice and flexibility. Our aim is to offer a practical, forward-thinking perspective on enterprise AI, where technology leaders and security experts work together to balance innovation with strong security.

Ready to dive into deployment options for LLMs? Let's break them down and see how flexibility enables companies to shape their own AI strategies.

A range of LLM deployment choices

Companies now have more ways than ever to use AI, whether that means relying on public models, running their own, or combining both. This flexibility lets them easily change their AI approach as needed. Each option has its strengths depending on the context, and our SonarQube platform is designed to support them all.

- Public LLM APIs: Many organizations start with public cloud AI services accessible via API. Models like OpenAI’s GPT-4o and Anthropic’s Claude 3.7 Sonnet offer state-of-the-art capabilities that are just an API call away. These public LLMs are trained on vast amounts of data and are updated regularly, so you can use very capable language models without hosting, scaling, or maintaining them yourself. Public models work well for getting projects off the ground and for tasks that call for broad knowledge or creative ideas, such as gathering requirements, producing rough drafts, or summarizing large documents. And because providers like OpenAI and Anthropic continually improve their models, you get access to new capabilities as they ship.

- Customer-managed LLMs: Sometimes enterprises want more control over how they use AI. With customer-managed deployments, such as running OpenAI’s models through Azure OpenAI Service, you get the benefits of advanced LLMs within your own secure environment. Azure OpenAI offers the same GPT-4 models as OpenAI’s public API, but runs them under your own Azure subscription, with its privacy, security, and contractual assurances. This approach fits best when data sovereignty, compliance, or customization are the top concerns. You might deploy an LLM within your virtual network, fine-tune it on your proprietary data, and enforce your organization’s access controls. The result is a private AI assistant that knows your domain and adheres to your policies. Customer-managed LLMs are especially useful for handling sensitive customer information, drafting internal policies, or any application where data control and privacy are essential.

- Hybrid architectures: Increasingly, enterprises are discovering that they can have it both ways. Hybrid LLM deployments combine public and private models, applying each where it makes the most sense. For example, an application could use a public API model for general knowledge queries or creative tasks, but switch to a private, customer-hosted model when dealing with confidential data or industry-specific queries. Development teams often use three to four different large language models to cover all their AI needs.
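The routing decision in the hybrid example can be sketched in a few lines of Python. This is a minimal illustration, assuming a hypothetical `LLMBackend` interface and stub backends (`PublicAPIBackend`, `PrivateBackend`) that stand in for real provider clients. It makes no actual API calls, and the `confidential` flag is an assumed input that a real system would derive from its own data-classification policy.

```python
from dataclasses import dataclass
from typing import Protocol


class LLMBackend(Protocol):
    """Hypothetical interface: any provider that turns a prompt into a completion."""

    name: str

    def complete(self, prompt: str) -> str: ...


@dataclass
class PublicAPIBackend:
    # Hypothetical stand-in for a public API client (e.g. a hosted GPT-4o or Claude endpoint).
    name: str = "public-api"

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


@dataclass
class PrivateBackend:
    # Hypothetical stand-in for a customer-managed deployment in your own cloud tenant.
    name: str = "private"

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


@dataclass
class HybridRouter:
    public: LLMBackend
    private: LLMBackend

    def route(self, prompt: str, confidential: bool) -> LLMBackend:
        # Confidential requests stay on the private deployment;
        # everything else goes to the public API.
        return self.private if confidential else self.public

    def complete(self, prompt: str, confidential: bool = False) -> str:
        return self.route(prompt, confidential).complete(prompt)


router = HybridRouter(public=PublicAPIBackend(), private=PrivateBackend())
print(router.complete("Summarize this RFC"))  # [public-api] Summarize this RFC
print(router.complete("Draft a policy for client X", confidential=True))  # [private] Draft a policy for client X
```

Because the application code depends only on the interface, replacing one provider with another, or moving a workload from public to private, changes how the router is constructed rather than every call site.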

The flexibility to choose between different LLM deployment options is great for innovation, but it also creates a challenge: how to ensure the code connecting your applications to these AI models is always high-quality, secure, reliable, and maintainable.

Integrating external APIs, managing data flows with internal models, and ensuring compliance across different systems can get messy. This is where Sonar comes in. SonarQube goes beyond just supporting different models or deployment locations. Our core goal is to ensure your AI-powered features are built on a solid foundation of code integrity and AI Code Assurance, no matter where the LLM comes from or how it's deployed.

So, what does Sonar really do for you when you're using LLMs? Here's the deal:

- Safety First: We check your code to make sure it doesn't accidentally expose secrets such as API keys, or leave vulnerabilities that attackers could exploit. This matters whether you're using a public AI service or your own private one.

- Keeping Things Running Smoothly: Connecting your code to AI models can get messy. We find bugs and other issues that could cause problems, so your app doesn't crash unexpectedly.

- Innovate Faster, But Safely: Our automatic checks give developers the green light to try new things without sacrificing security. You can use the best AI for the job, knowing your code is solid from the start.
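To make the first point concrete, here is a minimal Python sketch of the pattern that secret detection encourages: reading a provider credential from an environment variable rather than hardcoding it in source, where it could end up in version control. The variable name `LLM_API_KEY`, the helper `load_api_key`, and the `MissingCredentialError` class are hypothetical illustrations, not part of any Sonar or provider API, and this sketch does not describe how SonarQube's rules work.

```python
import os


class MissingCredentialError(RuntimeError):
    """Raised when the expected credential is not configured (hypothetical helper)."""


def load_api_key(env_var: str = "LLM_API_KEY") -> str:
    # Hypothetical helper: fetch the key from the environment at runtime
    # instead of writing it into the source file as a string literal.
    key = os.environ.get(env_var)
    if not key:
        raise MissingCredentialError(
            f"Set {env_var} before calling the model provider"
        )
    return key
```

The same helper works unchanged whether the key belongs to a public API or to a customer-managed deployment; only the environment differs.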

Sonar gives you this peace of mind at every step, whether you're using AI to brainstorm ideas or double-check sensitive info. We've got you covered.

Innovation and governance: a strategic decision framework

LLM deployment flexibility helps leaders balance innovation and control. This is key for CTOs who want to adopt the latest technology and for CISOs who need to keep everything secure and compliant. CTOs might prioritize speed and new features to stay ahead, while CISOs focus on protecting data and reducing risk. A flexible LLM approach lets both get what they need, making it smoother to bring new AI into the organization.

Having options for how AI models are deployed is crucial for maintaining security and compliance while still enabling innovation. Teams can choose the deployment method that best fits each situation—experimenting with the latest AI tools for new projects, while keeping sensitive workloads in secure, compliant environments. For instance, a tech team might pilot a new AI model for a customer-facing app, and if it proves successful or regulations shift, the model can be transitioned to a more secure, private environment.

This flexibility also helps organizations avoid vendor lock-in and the limitations of rigid solutions, benefiting both technology and security teams. It encourages collaboration and adaptability, which are especially important as generative AI continues to evolve rapidly. Keeping your options open is one of the most practical ways to stay ahead.

LLMs at every stage of the software development lifecycle

Generative AI brings exciting flexibility to the business world, especially with large language models that can add value at every stage of the software development lifecycle.

LLMs can be useful right from the start, helping teams design API specs, create mockups, and outline code. They also make it easier to gather and analyze requirements, write user stories, and define acceptance tests, ensuring everyone is clear on what needs to be built.

LLMs are especially helpful for developers. They increase developer productivity and satisfaction by automating routine tasks and accelerating the pace of coding. With features like code generation and autocompletion, developers can write code faster and focus more on creative problem solving and strategic thinking. This not only shortens the development cycle and speeds up time to market, but also makes coding more accessible to beginners, helping to democratize software development and drive greater innovation.

In the maintenance and evolution phase, LLMs can help identify areas for refactoring and suggest improvements, making software systems easier to maintain. They can also play a crucial role in documentation, automatically generating documentation from code and providing context-aware explanations that improve knowledge sharing and collaboration.

Freedom to choose, power to transform

Advanced language models like GPT-4 and Claude 3.7 Sonnet are reshaping what’s possible for businesses, but there’s no single right way to use them. Companies can choose from public AI services, private deployments, or a blend of both—whatever best fits their needs and requirements. The real advantage is having the flexibility to select the approach that aligns with your goals and policies.

At Sonar, we’re committed to supporting this freedom of choice. Whether it’s integrating a new model API that your developers are excited about, or deploying an instance in your own cloud because your compliance team requires it, we handle the connections and orchestration. Your focus can stay on innovation, while we ensure your AI infrastructure adapts to you, not the other way around. Every deployment option is valid, and all are welcome as part of your AI strategy.

The future of enterprise AI is positive, forward-looking, vendor-neutral, and full of choices. With the right support, those choices become opportunities for transformation. We invite you to explore this freedom, innovate boldly, and let us support you every step of the way on your journey to AI-powered excellence.

Together, let’s build the future – on your terms.

Start your free 14-day trial and explore for yourself.

Sonar's actionable code intelligence and AI Code Assurance capabilities ensure that all code, regardless of its origin, meets the highest quality and security standards, which is essential for building better software faster.