In the first post of this series, "State of Code Developer Survey report: The current reality of AI coding," we explored how AI has officially evolved from a weekend experiment into a daily professional practice. Developers are using these tools across every layer of the software stack, from prototyping to mission-critical services.

But widespread adoption doesn't automatically equal trust.

In this second chapter of our State of Code Developer Survey report, we dig deeper into the developer psyche to answer a critical question: Do developers actually trust the code that AI systems are generating? The answer reveals a growing tension between speed and security that every engineering team needs to address.

Speed increases as code confidence plummets

There is no denying that AI adoption is accelerating the development lifecycle. Our data confirms that developers experience these productivity gains as real.

82% of developers agree that AI tools help them code faster, and 71% say these tools help them solve complex problems more efficiently.

Developers are feeling the boost in their personal productivity, and more than half report being more satisfied with their jobs as a result. However, this increased velocity has created a new, paradoxical situation. While code is being generated faster than ever, the confidence in that code hasn't kept pace.

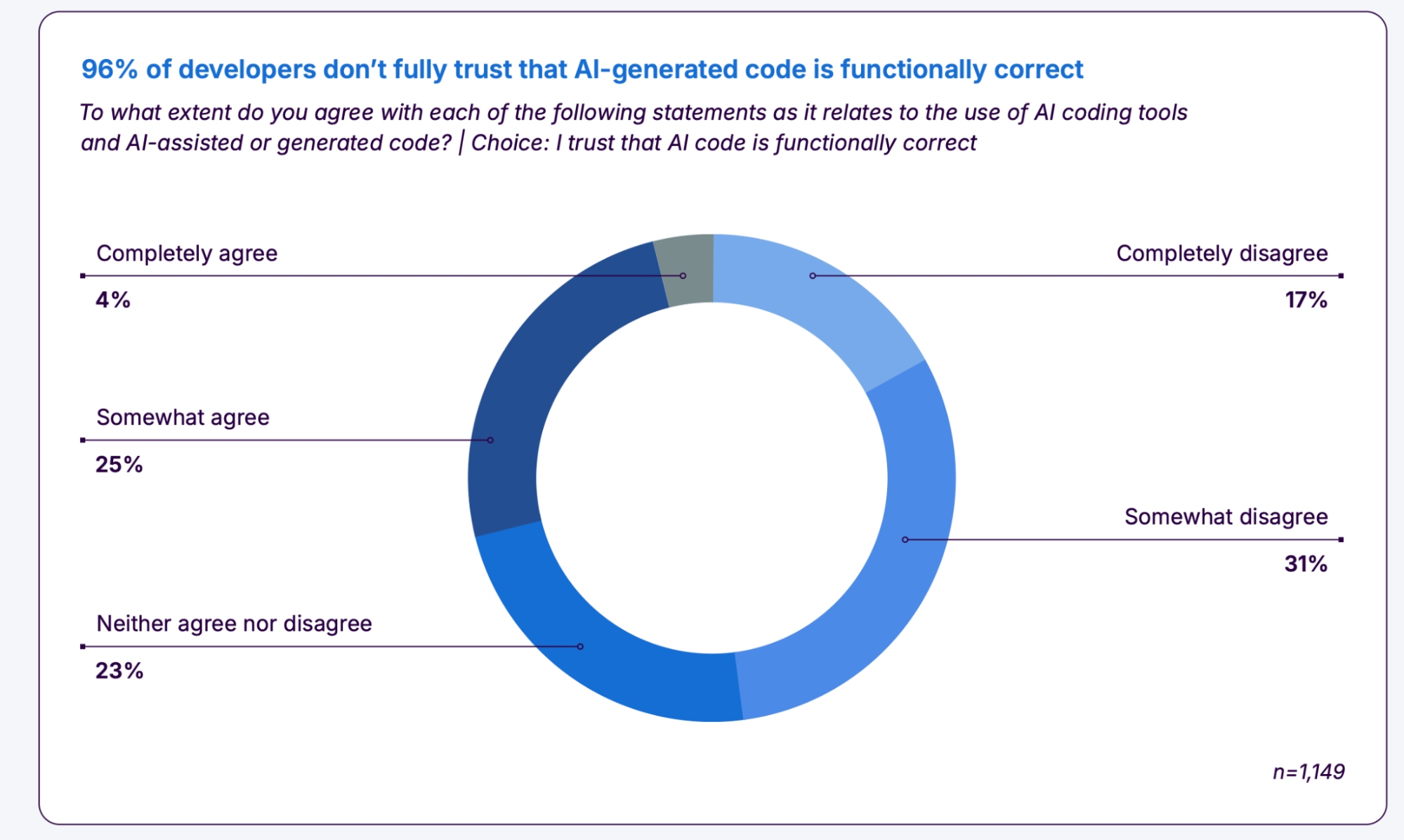

Our survey found a staggering statistic:

96% of developers don't fully trust that AI-generated code is functionally correct.

This massive "trust gap" highlights a central conflict of AI coding. Developers are using these tools to move fast, but they are rightfully skeptical of the output. They know that speed is meaningless if the code breaks in production.

The AI code verification bottleneck

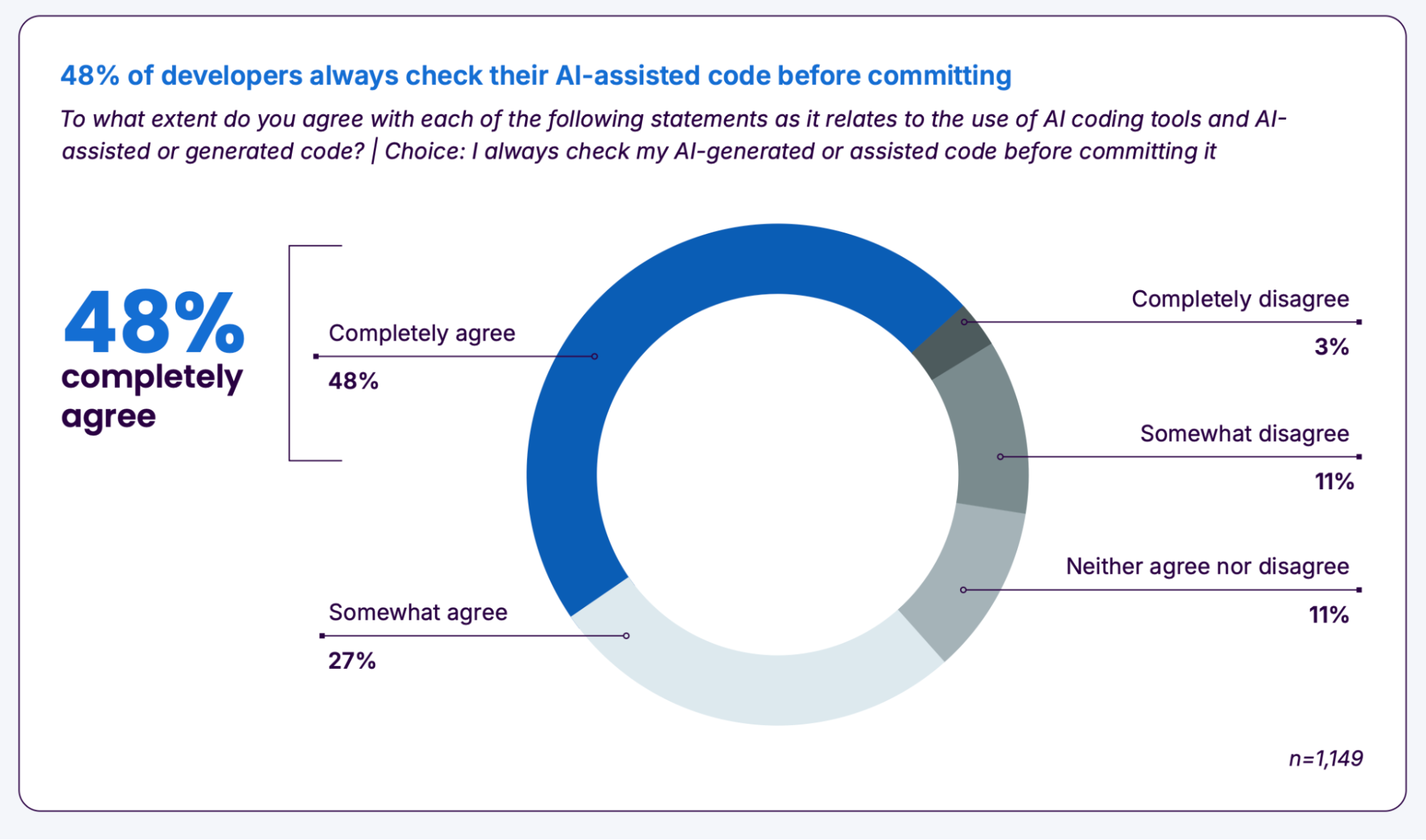

Given that nearly all developers harbor doubts about the functional correctness of AI code, you might expect that rigorous verification is the norm. Unfortunately, the reality is more concerning.

Despite this lack of trust, only 48% of developers say they always check their AI-generated or AI-assisted code before committing it. In the rush to ship features, teams may be letting their guard down.

This is likely because reviewing AI code is hard work. While AI is supposed to reduce toil, it often just shifts that toil downstream. In fact, 38% of developers say that reviewing AI-generated code requires more effort than reviewing code written by their human colleagues.

The problem of "looks correct but isn't"

Why is verification such a heavy lift? The issue lies in the deceptive nature of Large Language Models (LLMs).

61% of developers agree that AI tools often produce code that "looks correct but isn't reliable."

This creates a subtle and dangerous trap. Unlike a syntax error that breaks the build immediately, AI can generate plausible-looking logic that contains hidden bugs, security vulnerabilities, or hallucinations. Spotting these issues requires a high level of scrutiny and expertise, often more than is required to review human-written code.

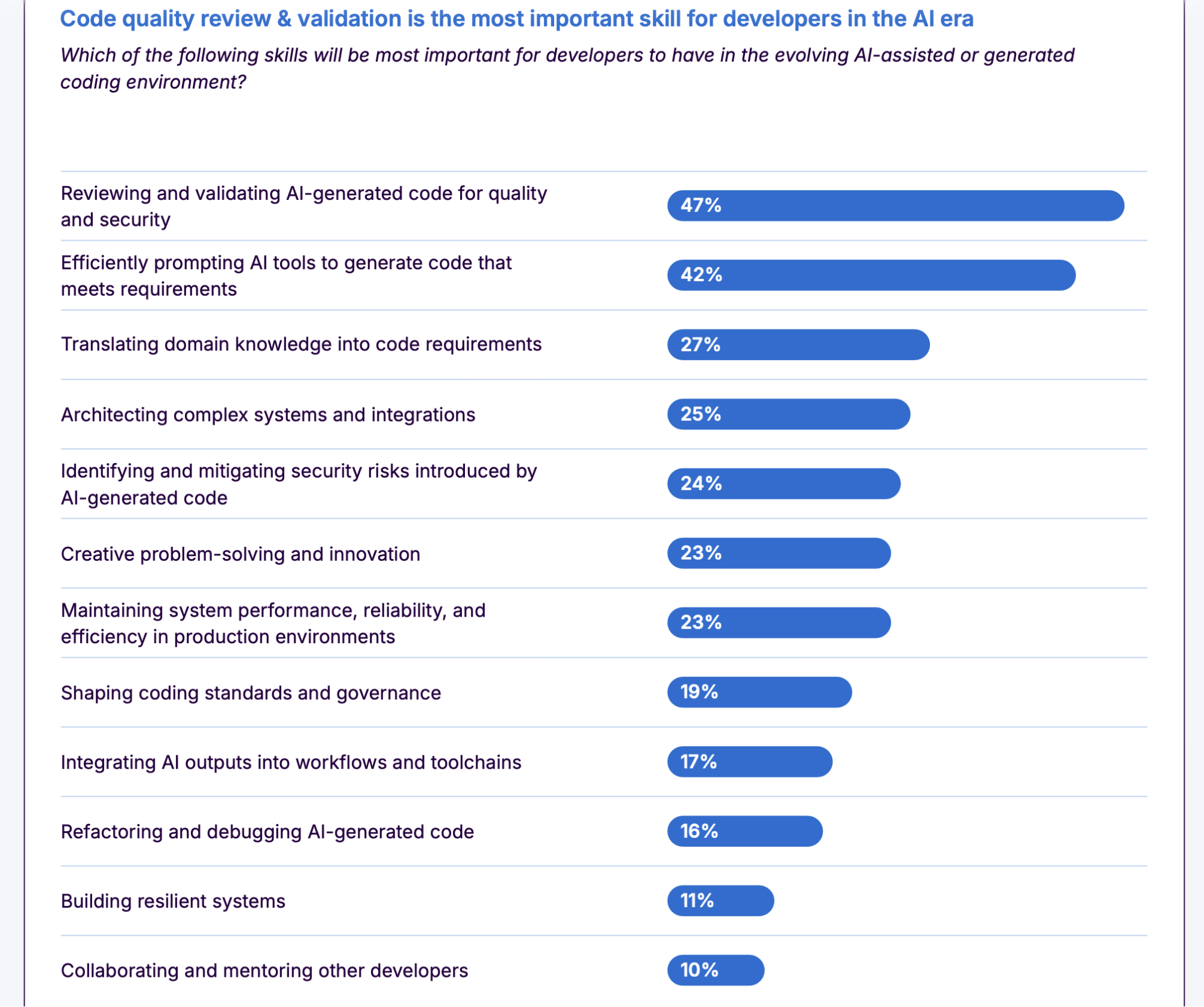

This effectively creates a bottleneck in the verification phase. The skill set required for modern development is evolving rapidly: developers now rank "reviewing and validating AI-generated code" as the single most important skill for the AI era.

Read the full Developer Survey report

This trust gap is just one piece of the puzzle. The full State of Code Developer Survey report explores the consequences of this shift, including how it impacts technical debt, the emerging verification bottleneck, and the surprising differences in how junior and senior developers are adapting.