Modern software development has fundamentally shifted toward market velocity as the primary driver of software value. The adoption of Large Language Models (LLMs) and AI coding assistants has radically accelerated the development lifecycle, with developers potentially achieving up to a 55% increase in productivity and completing tasks twice as fast. This boost in feature delivery speed is now a competitive imperative for top-tier organizations.

However, this acceleration introduces a fundamental risk: an Engineering Productivity Paradox. The velocity gain increases the accumulation of code quality liabilities, specifically bugs, security vulnerabilities, structural complexity, and technical debt. This decline in quality is not due to developer negligence; it is a direct consequence of the speed and mechanism of AI code generation. Even as LLMs generate better code, the sheer volume creates bottlenecks in manual code review and verification. As a result, a large, growing, and unmanageable mass of new issues is introduced into codebases, causing a decline in overall quality. Accepting this decline is often viewed as a calculated, strategic trade-off for speed-to-market advantages.

The strategic objective is not to eliminate AI use, but to establish automated code review and governance mechanisms capable of managing and mitigating the quality issues introduced by increased code volumes.

Quantifying the code quality inflection point

Generative AI is accelerating every phase of the Software Development Lifecycle (SDLC). While AI-assisted Pull Requests (PRs) reduced median resolution time by more than 60%, this throughput increase places a higher review load on developers and greatly expands the surface area for quality issues.

The mechanism of structural decay

Technical debt, defined by problems like high cyclomatic complexity, excessive duplication, and lack of maintainability, is rapidly accruing. This structural debt arises because LLMs prioritize local functional correctness over global architectural coherence and long-term maintainability.

Empirical evidence confirms this decay:

- Accelerated duplication: AI’s ability to generate functional snippets instantaneously creates a structural incentive for developers to accept quick, duplicated code rather than performing complex refactoring. GitClear’s 2020 to 2024 analysis tracked an 8-fold increase in the frequency of code blocks containing five or more duplicated lines, confirming a significant decline in code reuse. Furthermore, 2024 was the first year where the number of copy/pasted lines exceeded the number of moved (refactored) lines.

- Increased complexity: Cyclomatic Complexity, a metric correlated with maintenance difficulty, is generally higher in LLM-generated code. Because AI-generated code tends to raise Lines of Code, Halstead metrics, and Cyclomatic Complexity together, maintainability issues rise with it, which points to a growing accumulation of structurally weak code.
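To make the duplication metric concrete, the following is a minimal sketch of how blocks of five or more duplicated lines might be detected with a sliding window. It is an illustration only, not GitClear's methodology or SonarQube's detection algorithm; the function name `find_duplicate_blocks` and the whitespace-only normalization are assumptions made for this example.

```python
from collections import defaultdict


def find_duplicate_blocks(source: str, window: int = 5) -> dict[str, list[int]]:
    """Return every `window`-line block that occurs more than once.

    Keys are the normalized block text; values are the 1-based line
    numbers at which each occurrence starts. Hypothetical helper.
    """
    # Keep original line numbers, strip indentation, and drop blank lines
    # so that formatting differences alone do not hide a duplicate.
    numbered = [(i + 1, line.strip()) for i, line in enumerate(source.splitlines())]
    numbered = [(n, text) for n, text in numbered if text]

    seen: dict[str, list[int]] = defaultdict(list)
    for start in range(len(numbered) - window + 1):
        chunk = numbered[start:start + window]
        key = "\n".join(text for _, text in chunk)
        seen[key].append(chunk[0][0])

    # A block counts as duplicated only if it starts at two or more places.
    return {block: starts for block, starts in seen.items() if len(starts) > 1}
```

A production detector would typically compare token streams rather than raw text, so that renamed variables still match, and would merge overlapping windows into a single longer block.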

The hidden cost of delivery instability

The rise in code liabilities translates directly into an increased review and remediation workload. Data from a Harness survey indicates that 67% of developers reported spending more time debugging AI-generated code. This bloated, AI-generated code is inherently harder and more expensive to maintain and integrate.

Crucial evidence for the speed-quality trade-off emerged from Google's 2025 DORA Report, which found that a 90% increase in AI adoption was associated with an estimated 9% climb in bug rates, a 91% increase in code review time, and a 154% increase in pull request size. This points to increased latent defect density in high-velocity code.

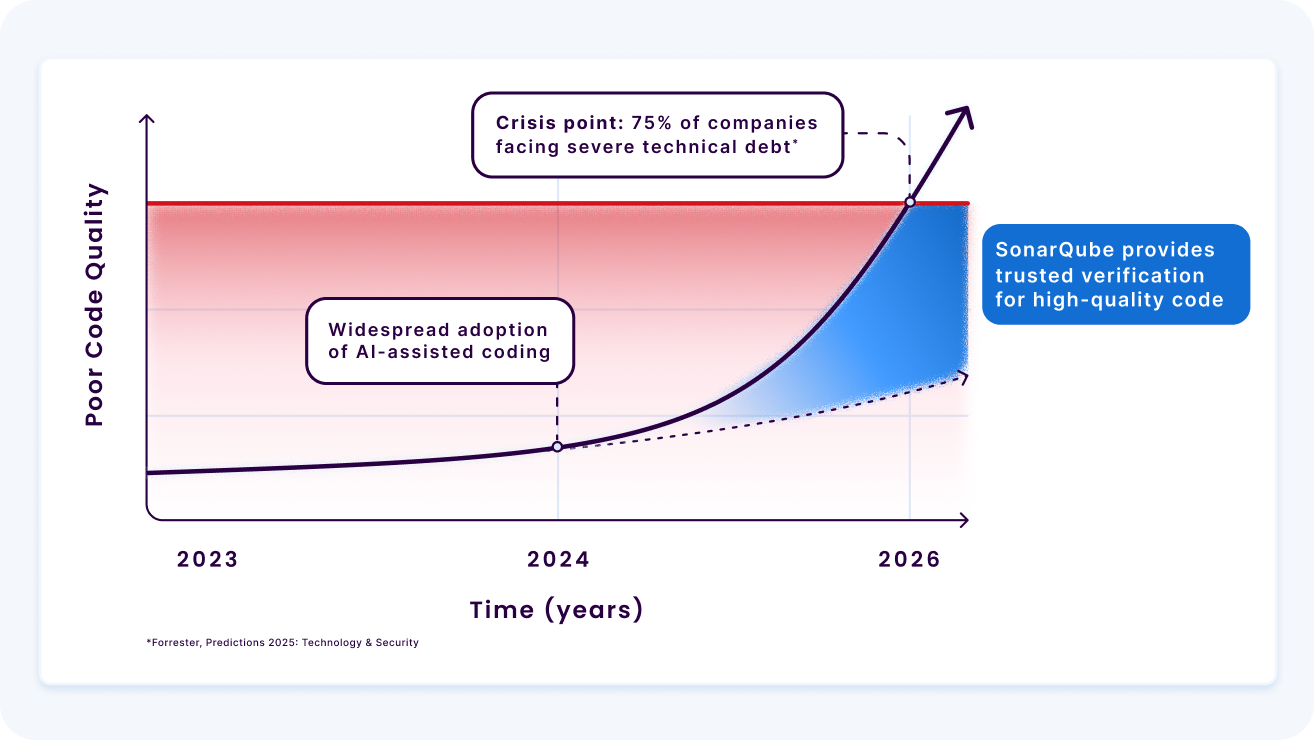

Taken together, the massive duplication surge and the DORA stability drop indicate that 2024 marked a critical code quality inflection point, where the accumulation of poor-quality code began to accelerate as a share of overall codebase volume worldwide. This coincides with the industry-wide adoption of AI-assisted coding practices. In its 2025 Predictions Guide, Forrester predicts that by 2026, 75% of technology decision-makers will face moderate to severe technical debt.

SonarQube is the industry standard trust and verification layer for high-quality code.

Resolving the Engineering Productivity Paradox is a strategic imperative

The exponential trajectory of technical debt creates a catch-22 scenario. Companies must adopt AI for competitive speed, but this adoption increases technical debt, requiring even more resources to manage.

The DORA amplification thesis

Google’s 2025 DORA Report introduced the definitive thesis: AI doesn't fix a team; it amplifies what’s already there.

- Teams with strong control systems (e.g., robust testing, mature platforms) utilize AI to achieve continued high throughput with stable delivery.

- Struggling teams, constrained by tightly coupled systems, find that increased change volume only intensifies existing coding problems.

This validates the core premise: the real issue is the absence of the tooling needed to channel AI's speed without degrading the codebase. To secure the productivity gains offered by AI, organizations must pivot from reactive manual reviews and debugging to proactive automated code reviews and quality gates that enforce quality before code is merged.
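As a rough illustration of what a pre-merge quality gate does, the sketch below compares reported metrics against maximum thresholds and lists any violations. The metric names, thresholds, and the `Condition` and `evaluate_gate` helpers are all hypothetical; this is not SonarQube's API or its default gate configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Condition:
    metric: str       # hypothetical metric name reported by an analysis step
    threshold: float  # maximum value allowed before the gate fails


def evaluate_gate(metrics: dict[str, float], conditions: list[Condition]) -> list[str]:
    """Return a list of failure messages; an empty list means the gate passes."""
    failures = []
    for cond in conditions:
        value = metrics.get(cond.metric)
        if value is None:
            # Treat a missing measurement as a failure rather than a silent pass.
            failures.append(f"{cond.metric}: no measurement reported")
        elif value > cond.threshold:
            failures.append(f"{cond.metric}: {value} exceeds limit {cond.threshold}")
    return failures


# Illustrative thresholds only, applied to newly added code.
GATE = [
    Condition("new_bugs", 0),
    Condition("new_vulnerabilities", 0),
    Condition("duplicated_lines_pct", 3.0),
]
```

In a CI pipeline, a step along these lines would exit with a non-zero status whenever `evaluate_gate` returns any failures, which blocks the merge until the issues are resolved.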

SonarQube: the verification layer for managed acceleration

The accumulated technical debt generated during the high-velocity adoption phase will become structurally and financially unsustainable without automated code review and remediation. The solution lies in specialized, context-aware tooling that provides a "last mile" quality check.

SonarQube is the industry standard automated code review platform that directly addresses this crisis.

How SonarQube resolves the Engineering Productivity Paradox

The Engineering Productivity Paradox is resolved by transitioning from unverified usage of AI to managed acceleration. SonarQube acts as the necessary verification and trust layer by integrating code quality and code security checks directly into the development workflow, at every branch, pull request, and merge.

- Context-aware automated reviews: Standard AI tools often lack the necessary scope to detect subtle quality issues rooted in deep duplication. SonarQube identifies duplication, outdated constructs, and architectural inconsistencies across massive codebases, tasks previously too complex or expensive for human developers to perform manually.

- Mitigating decay metrics: SonarQube actively detects and remediates the structural issues (like high Cyclomatic Complexity and duplication) that mathematically decrease the Maintainability Index.

- Sustaining exponential gain: For elite teams, those with robust platforms and testing, implementing SonarQube ensures the high accrual of debt is proactively mitigated. This enables them to maximize velocity with controlled, predictable costs, achieving an exponential gain. By leveraging automated, context-aware AI verification systems like SonarQube, the trend of accumulating poor code quality bends downward.
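The relationship between these decay metrics and the Maintainability Index can be sketched with the classic, unnormalized formula, MI = 171 - 5.2 ln(V) - 0.23 G - 16.2 ln(LOC), where V is Halstead Volume, G is Cyclomatic Complexity, and LOC is lines of code. The snippet below assumes that classic form; several tools use rescaled or modified variants, and nothing here is meant to describe how SonarQube itself scores maintainability.

```python
import math


def maintainability_index(halstead_volume: float,
                          cyclomatic_complexity: float,
                          lines_of_code: int) -> float:
    """Classic (unnormalized) Maintainability Index; higher is better.

    MI = 171 - 5.2*ln(V) - 0.23*G - 16.2*ln(LOC)
    """
    if halstead_volume <= 0 or lines_of_code <= 0:
        raise ValueError("Halstead volume and lines of code must be positive")
    return (171.0
            - 5.2 * math.log(halstead_volume)
            - 0.23 * cyclomatic_complexity
            - 16.2 * math.log(lines_of_code))


# Hypothetical inputs: the same feature written compactly versus
# with extra branching and duplicated code.
compact = maintainability_index(halstead_volume=1000, cyclomatic_complexity=5, lines_of_code=50)
bloated = maintainability_index(halstead_volume=4000, cyclomatic_complexity=20, lines_of_code=200)
```

Every term after the constant is subtracted, so growth in volume, branching, or line count can only push the index down. This is why duplication, which inflates both V and LOC, and high complexity both register as maintainability loss.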

The integration of SonarQube into the developer workflow shifts the future value proposition from raw output volume to intelligent code creation. By implementing rigorous code governance and specialized, context-aware automated code reviews, companies transition to managed acceleration and can reach sustained, exponential return on their technological investment.